
Masters of Modern Physics


Advisory Board

Dale Corson, Cornell University
Samuel Devons, Columbia University
Sidney Drell, Stanford Linear Accelerator Center
Herman Feshbach, Massachusetts Institute of Technology
Marvin Goldberger, Institute for Advanced Study, Princeton
Wolfgang Panofsky, Stanford Linear Accelerator Center
William Press, Harvard University

Series Editor

Robert N. Ubell

Published Volumes

The Road from Los Alamos by Hans A. Bethe
The Charm of Physics by Sheldon L. Glashow
Citizen Scientist by Frank von Hippel


SHELDON L. GLASHOW

A TOUCHSTONE BOOK

Published by Simon & Schuster

New York London Toronto Sydney Tokyo Singapore


Touchstone

Simon & Schuster Building

Rockefeller Center

1230 Avenue of the Americas

New York, New York 10020

Copyright © 1991 by The American Institute of Physics

All rights reserved

including the right of reproduction

in whole or in part in any form.

First Touchstone Edition 1991

Published by arrangement with The American Institute of Physics

TOUCHSTONE and colophon are registered trademarks

of Simon & Schuster Inc.

Manufactured in the United States of America

10 9 8 7 6 5 4 3 2 Pbk

ISBN 0-671-74013-X Pbk.

This book is volume one of the Masters of Modern Physics series


The Mysteries of Matter

THE WORK OF A THEORIST: ELEMENTARY PARTICLES

THE WORK OF A THEORIST: GRAND UNIFICATION

Unified Theory of Elementary-Particle Forces

Tangled in Superstring

SSC: Machine for the Nineties


But in the next few years, the two cultures were thrown together in ways that Snow had not anticipated. The products of science and technology intruded themselves into our daily lives, making it impossible to live in the modern world without tripping over the connections. Science is no longer secreted away in remote laboratories where apparatus bubbles in the night. It is everywhere.

The automobile was the first to invade. It brought not only the social transformation of leisure and work but also the complexities of fuels, combustion, and chemistry into everyday life. Now the expert was not the only one who knew something. The kid down the block could tell you a thing or two about how cars worked, even if he knew nothing of Greek mythology or relativity.

Today, we're all so familiar with airplanes and helicopters, with VCRs and computers, with contraceptives and mood-altering drugs, that they no longer appear to us as creations of science and technology. They now take their ordinary places next to your box of breakfast cereal.

We now talk with casual familiarity about our cholesterol levels or about the ecological consequences of ozone holes. Even kids adopt techno-jargon as street slang: blast-off, nuke, clone. People are much more familiar with Stephen Hawking than they are with Stephen Dedalus, even though Stephen King still wins that popularity contest.

That is not to say that the untrained know the foundations of physics or the concepts that underlie other sciences. When pushed, however, people do find out things. Those who first introduced nuclear power onto the American landscape in the fifties discovered to their surprise that large numbers of technically unsophisticated citizens could learn a great deal about reactors. Similarly, ordinary people have educated themselves about other technologies that play a central role in our environment or that have consequences elsewhere—drugs, abortion, AIDS.

As science and technology become more intricately threaded into our existence, the need for us to understand what it is all about, how it works, and what it does becomes very personal. As the turn of the century approaches, we will no longer be able to look for jobs merely with the strength of our hands or our native intelligence. The intimate way you live your life—the food you eat, the fuels you burn at home or in your car, even how you choose a partner—will depend in part on your technical sophistication.

For these and other, broader cultural reasons, the Masters of Modern Physics series has been launched by the American Institute of Physics, a consortium of major physics societies. The series introduces to the reading public the work and thought of some of the most celebrated physicists of our day. These volumes of collected essays offer a panoramic tour of the way science works, how it affects our lives, and what it means to those who practice it. Authors report from the horizons of modern research, provide engaging sketches of friends and colleagues, and reflect on the social, economic, and political consequences of the scientific and technical enterprise.

Authors have been selected for their contributions to science and for their keen ability to communicate to the general reader—often with wit, frequently in fine literary style. All have been honored by their peers and most have been prominent in shaping debates in science, technology, and public policy. Some have achieved distinction in social and cultural spheres outside the laboratory.

Many essays are drawn from popular and scientific magazines, newspapers, and journals. Still others—written for the series or drawn from notes for other occasions—appear for the first time. Authors have provided introductions and, where appropriate, annotations. Once selected for inclusion, the essays are carefully edited and updated so that each volume emerges as a finely shaped work.

Robert N. Ubell


After the War, my father installed a small chemistry laboratory for me in the basement of our house in Manhattan. I had outgrown chemistry sets, and had become intrigued (who knows why?) by the chemistry of selenium. My “research project”—the substitution of selenium for sulfur in the hydroculture of tomato seedlings—led to my selection as a finalist in the Westinghouse Science Talent Search. I began preparing for my career as a physicist as an undergraduate at Cornell University, and subsequently, as a graduate student at Harvard. A capsule biography, which I recently wrote for the 1989 Harvard-Radcliffe Yearbook, summarizes what happened next:

When I came to Harvard in 1954, I discovered, to my horror, that lowly graduate students had nothing like Cornell's “Game Room” to hang out in. Instead, I was soon installed in a windowless warren of tiny cubicles in the dank cellar of Lyman Laboratory. Here, assisted by an array of antique and noisy mechanical calculators, a collection of worn and worthless books, occasionally encouraged by an avuncular nod from my research supervisor Julian Schwinger, I was expected to generate a monumental contribution to Science—with six copies, typed, printed, bound, and on 100 percent (or else!) rag paper. My pet leech offered scant solace.

Four years later I escaped to enjoy a two-year paid European holiday courtesy of the National Science Foundation. [Note added:—Perhaps it was not all vacation. During those years I did the work that would earn me a Nobel Prize two decades later.] My thesis had been duly buried in the Harvard Archives, my leech under the World Tree. While I was abroad, Murray Gell-Mann, the hyphenated guru of elementary particles, mistook me for an East German prodigy and lured me to Cal Tech for what would become a 66-month period of exile in a strange land. I discovered smog, was ticketed for jay-walking, played go, went rock climbing, taught at Stanford and Berkeley, and survived the Filthy Speech Movement.

In tenure and triumph, I returned to Harvard in 1966 with a mere thirty percent cut in salary. Now at last, I could do unto others what Harvard had done unto me! There was still no tolerable pool room in Cambridge, nor is there likely to be one with today's no-smoking ordinances. So it's back to work on the one problem that has always fascinated me—that has passed through the minds of generations of scientists, that was entrusted to me by Schwinger, and that I must entrust to my students to address in the inimitable fashion of the physicist—“What does it all mean?”

Implicit in this tongue-in-cheek essay is the theme of this volume: physics can be just plain fun as well as an obsession. How marvellous it is that we are paid for what we so love to do!

What changes have been wrought since I started my career! Men on the moon, pocket calculators, VCRs. The whole face of science has changed with the emergence of new forefront disciplines such as recombinant genetics and plate tectonics. But the most radical transformations have taken place in particle physics and cosmology, at opposite ends of the scale of sizes. When I began my studies, scientists had no firm notion of how the universe began or what an elementary particle is. In recent years, however, two “standard models” have been developed: one of Big Bang cosmology, the other of particle physics in terms of quantum chromodynamics and the electroweak model. The macro- and the micro-theories are compatible, both are supported by an impressive body of empirical evidence, and no established data has yet contradicted either theory. Together, these two structures constitute the beginning of a formal theory underlying all there is, was, and will be. It is almost a religion, with its own Genesis (. . . and then, 15 billion years ago, there was light!), and its own Trinity (the proton: indivisible, but made of three quarks). But we are not there yet. The toughest questions remain unanswered.

How did stars group together in galaxies, which themselves are gathered in clusters and even in clusters of clusters, all of which surround vast and empty spherical voids patterned rather like suds in a kitchen sink? We do not know what constitutes the very matter of the universe, for stars, clouds of gas, and dust cannot account for its mass. Although most matter is invisible to us, we are aware of its gravitational effects. Astronomers have (reluctantly, to be sure) driven us to conclude that the universe is predominantly made of something eerie and unearthly, which, judged against our index of 17 fundamental particles, is almost certainly “none of the above.”

We do not know why particles exist, why they have a particular mass, or why they are subject to certain forces. Our standard model is honest: it tells us that within this context, there are no answers. Moreover, our “grand unified theory” is neither grand (the proton lifetime is wrong), nor unified (gravity is left out), nor is it really a theory (it doesn't solve any of the above puzzles).

Quantum field theory, the marriage of quantum mechanics and special relativity as devised by Dirac, Schwinger, Feynman, Tomonaga, and others, has served us well for over 40 years, and helped establish our standard model of particle physics. But it has reached an impasse; the theory simply cannot describe gravity, and is therefore unable to explain the earliest moments of the creation of the universe. Quantum field theory also falls short of answering any of today's particle puzzles. Evidently, we need to make a giant step toward a far more powerful conceptual framework. Superstring theory is an ambitious and fashionable attempt to overcome all obstacles by supposing that elementary particles are actually tiny loops of string, rather than pointlike structures. Within the context of superstrings, a quantum theory of gravity emerges naturally. Perhaps someday a theory will be able to answer Isidor Rabi's famous query: Who needs the muon? So far, superstring theory cannot begin to answer the question; instead, it has led a whole generation of brilliant graduate students into an increasingly intricate ten-dimensional mathematical morass.

At the 1989 conclave of the International Summer School on Subnuclear Physics in Erice, Antonino Zichichi, its founder and director, asked one of his younger and more abstract speakers, “Can any experiment, or even an imagined experiment, determine whether what you have said is true?” The answer was negative. Zichichi's question is of prime importance in an age when mathematics can masquerade as physics. Julian Schwinger believes in a unified theory of everything, but he and I feel that the time is not yet ripe for such hubris.

Particle physicists and cosmologists—who operate at opposite extremes on the cosmic ladder—are among the last prophets of a scientific revolution. We hope for data that will invalidate the theoretical framework we have so painstakingly built. We look forward to a time when the essays in this collection become obsolete because our successors will have formulated a better theory of how things work and what it all means. The future will be chock-full of experimental surprises that will compel us to revamp and improve our standard models and approach more closely the One and True Synthesis.


Among my chums at the Bronx High School of Science were Gary Feinberg and Steven Weinberg. We spurred one another to learn physics while commuting on the New York subway. Another classmate, Dan Greenberger, taught me calculus in the school lunchroom. High-school mathematics then terminated with solid geometry. At Cornell University, I again had the good fortune to join a talented class. It included the mathematician Daniel Kleitman, my old classmate Steven Weinberg, and many others who were to become prominent scientists. Throughout my formal education, I would learn as much from my peers as from my teachers. So it is today among our graduate students.

I came to Harvard as a graduate student of physics in the fall of 1954. Danny Kleitman, Dave Falk, and I were the Cornell contingent. Steve Weinberg, Laurence Mittag, and Tema Ehrenreich were also of the notorious Cornell crowd. Steve chose Princeton, by which I was rejected; Laurence chose Yale and now teaches engineers at Boston University; Tema (Mrs. Henry Ehrenreich) is part of the history of condensed matter physics at Harvard. During my first year, I collaborated on a paper about nuclear physics with Walter Selove, then a junior faculty member. It was my only sortie into this antique and exotic discipline. Today, Harvard students of the nuclear persuasion must cross-register at MIT or, preferably, switch.

Mostly, I took a lot of courses, including especially the inspired and inspiring lectures of Julian Schwinger, my thesis director-to-be. The late Jun Sakurai, then a precocious Harvard senior, was a classmate, as was T.T. Wu, the noted particle physics presence. Among the other courses was John Van Vleck's famed group theory. Over the years, it has evolved into an entirely unrecognizable but absolutely essential course in group theory for particle physics, now taught by Howard Georgi. I also endured the first course ever taught by Paul Martin, on relativity: special, general, and incomprehensible.

Requirements were to be met in two foreign languages, and in what for me was yet a third, laboratory work. French was a pushover, but my passing the Russian exam was a mean trick. There is no longer any foreign language requirement in our department. Physics, the world over, is done in Pidgin English. A graduate version of the college's expository writing course might be a useful requirement today.

Since I had already experienced the joys and sorrows of dropping lead bricks on fragile Geiger tubes at Cornell, I had no intention of doing yet another laboratory course. I exercised my option to take an extra oral examination in methods of experimental physics. Ken Bainbridge and Curry Street were my examiners. I passed. In the intervening decades, to my knowledge, no one else has chosen this route of avoiding the lab course.

The time had come to choose my thesis director. About 10 of us approached Professor Schwinger simultaneously: Kleitman, Falk, Marshall Baker, Charlie Sommerfield, Harold Weitzner, Ray Sawyer, Charlie Warner, Bob Warnock, me, and perhaps another. The master assigned us the problem of computing the electron propagator in Coulomb gauge. Collaborating, we appeared a few days later, en masse, with the solution. Schwinger's strategy had failed; he had to invent a different problem for each of us.

Charlie Sommerfield got the problem of how an electron is affected by a magnetic field. He was to show that the original fourth-order calculation of Karplus and Kroll was flawed. Eventually, he was the first to compute the anomalous moment to sixth order. Now at Yale, he leaves the question of yet higher corrections to others.

Danny Kleitman, as I recall, was assigned the properties of an electron in an electric field. Eventually, he completed his doctoral research partly with Schwinger and partly with Roy Glauber. One of Danny's accomplishments was the derivation of the analog to the Gell-Mann-Okubo mass formula in Schwinger's global symmetry scheme. The formula doesn't work, and global symmetry is all wet. Danny has since become a great mathematician, and my brother-in-law as well.

I was to work on the possible synthesis of weak and electromagnetic interactions by means of the newly invented Yang-Mills, or non-Abelian, gauge theories. It was a bit premature. The experimentalists had not yet identified the V−A form of weak interactions. The old dogma (STP), based upon a silly theory and three incorrect experiments, had only recently given way to VT. One false experiment had not yet been exorcised, and furthermore, parity violation had not yet been discovered.

During my years at Harvard, I particularly remember interactions with Wally Gilbert, Chuck Zemach, Ken Johnson, Irwin Shapiro, George Sudarshan, and Abe Klein. From Abe, all I wanted was a reading course in field theory. “Junior Fellows are not obliged to teach,” more or less, was Klein's comment. Irwin was Roy Glauber's first student. He maintained a sort of salon on Shepard Street, and gave many large parties. Chuck was privy to the last and unpublished portion of Schwinger's great opus: TQF, or the theory of quantum fields. Unfortunately, I could never understand it. In my third year at graduate school, Chuck and I shared an apartment in Brighton. We gave at least one glorious party. I nodded off early in the evening, and discovered several days later that Roy Glauber had put a frankfurter in my flute. Wally Gilbert, at Harvard, was completing a thesis under Salam at Imperial College. It was something about backwards dispersion relations, as I remember. He was by far the most mathematical physicist I had ever met. This was prior to our acquisition of Arthur Jaffe. How remarkable was Gilbert's transition to a hands-on biological science, and how successful as well.

By the spring of 1958, I had put together a respectable thesis, and had been awarded an NSF postdoctoral fellowship. I decided to spend a year at the Lebedev Institute in Moscow under the direction of Igor Tamm. This was easier said than done. My thesis exam took place in July at the University of Wisconsin. The committee consisted of Schwinger, Martin, and Frank Yang. The examination consisted partly of a debate between Schwinger and Yang. I had tacitly assumed that the electron-neutrino and muon-neutrino were distinct particles, for three reasons. First, from the point of view of a gauge theory, the universality of weak interactions demanded separate neutrinos. Second, Gary Feinberg, my old high-school buddy, who was then, and is now, at Columbia, had shown that the intermediate vector meson hypothesis, in a one-neutrino theory, led to an unacceptable rate for μ → eγ. Most importantly, the two-neutrino hypothesis was an integral part of Schwinger's teaching. After Schwinger had convinced Yang that my assumption was physically meaningful and predictive, I was excused so that the committee could decide my fate. Three years later, at Brookhaven National Laboratory, Lederman, Schwartz, and Steinberger were to prove the truth of Schwinger's two-neutrino scheme.

Since my Russian visa had not yet come, I accepted an invitation along with Danny Kleitman to visit the Niels Bohr Institute for the interim. The interim turned into a very productive two-year stint at Copenhagen and at the European research consortium CERN. The Russian visa never did come, despite the frequent assurances of several Russian consuls that it would appear “tomorrow.”

I shall not dwell upon my seven-and-a-half-year period of exile from Harvard. Let me jump to January 1966, when I moved from Berkeley to Harvard to continue my fruitful collaborations with Sidney Coleman, my first, best, and somewhat unofficial student. He and I had already successfully explored the electromagnetic properties of baryons in Gell-Mann's unitary symmetry scheme. We had also just missed the discovery of the Gell-Mann-Okubo mass formula and of the Cabibbo current. The period 1966–1970 was terribly dull. Although Steve Weinberg, in 1967, brilliantly synthesized the Copenhagen SU(2) × U(1) electroweak model with the Higgs-Kibble mechanism of spontaneous symmetry breaking, no one seemed to notice or care.

In 1970, we acquired two spectacular postdoctoral fellows, John Iliopoulos and Luciano Maiani. Together, we discovered the GIM mechanism (for Glashow-Iliopoulos-Maiani), an important ingredient of today's electroweak theory. However, our prediction of the existence of a new kind of matter, charm, was not generally accepted. At this point, I took a leave of absence to become a visiting professor at the University of Aix-Marseille, along with John and my student Andrew Yao, now a well-known computer scientist. We were able to remove some, but not all, of the infinities plaguing electroweak models. It was at Marseille that Tini Veltman informed us of the signal accomplishment of his young student, Gerard 't Hooft: the proof of the renormalizability of the spontaneously broken electroweak theory. Things were beginning to happen quickly, so I hurried back to Harvard. In September 1971, I met my wife-to-be as we were shucking corn for a dinner party at the Kleitmans' home. Joan, and Danny's wife Sharon, are two of the four notorious Alexander sisters. Meanwhile, experimenters worldwide were racing to discover the predicted neutral currents, and Harvard acquired five very promising young particle theorists: Howard Georgi, Alvaro de Rujula, Helen Quinn, Tom Appelquist, and the precocious graduate student, H. David Politzer.

By 1973, neutral currents had been discovered by the CERN group, and were rapidly confirmed at Fermilab by a group led by our very own Carlo Rubbia. Moreover, Carlo's group produced convincing evidence for the existence of a new form of matter, looking tantalizingly like our predicted charmed particles. The situation at the time was reviewed in the form of a play presented in the Jefferson Laboratory on December 3, 1973. Alvaro de Rujula was moderator (an experimentalist), Helen Quinn was speaker (a conservative theorist), Howard Georgi was a talking computer, and I played the role of a model builder.

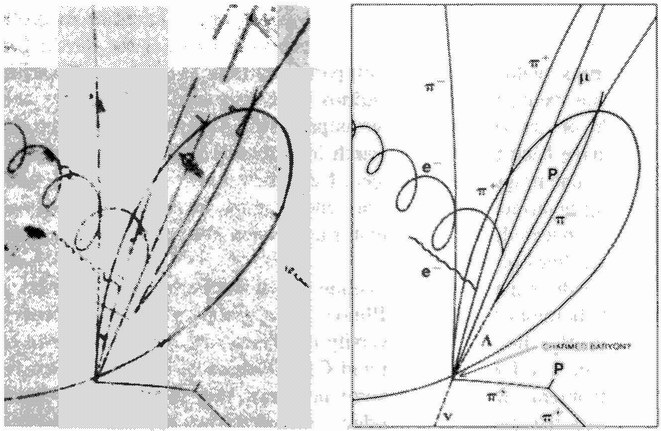

Roy Weinstein, later the Dean of Science at Houston, invited me to speak at the annual convocation of Experimental Meson Spectroscopists at Northeastern in April 1974. My talk was titled, “Charm: An Invention Awaits Discovery.” I offered to eat my hat if charmed particles were not found before the next such meeting. Moreover, the discovery would be made, I claimed, either in neutrino physics or in e+e− physics. Sure enough, the J/ψ particle was discovered in the November revolution in 1974, simultaneously at Stanford and at Brookhaven. The Harvard theory group was convinced that charm had been discovered. Indeed, Appelquist and Politzer had essentially predicted the appearance of a charmonium resonance in e+e− annihilation. However, the rest of the world was not convinced. As far as I can tell, only Sakharov's group in the Soviet Union, John Iliopoulos in France, and the guys at Cornell shared our confidence and enthusiasm. Not even the observation of a neutrino-produced charmed baryon by Nick Samios in March 1975 convinced the doubters. In fact, in early 1976 a group at the Stanford Linear Accelerator Center (SLAC) published a paper describing their failure to find charm. Fortunately, I was able to convince Gerson Goldhaber to go back and have a second look. He took my advice, and by the spring of 1976 charmed particles were finally found in great numbers at SPEAR with the properties expected of them. At the 1976 meeting of the Conference on Experimental Meson Spectroscopy, I was not to eat my hat. Rather, small and malodorous candy hats were distributed to the participants.

A theory of strong interactions, quantum chromodynamics, had appeared on the scene. It, too, was a non-Abelian gauge theory, but one acting on the hidden variable called color. The theory had many roots and no single discoverer. Gell-Mann's quarks were, of course, essential. So were the observations of Dalitz and Morpurgo on the success of the quark model under the pretense that quarks behaved as bosons. Nambu and Greenberg suggested the notion of a hidden color degree of freedom. One of the crucial steps was the discovery of the asymptotic freedom of non-Abelian gauge theories. This was the key to the understanding of the success of the quark parton model of deep inelastic lepton scattering, and of the narrow observed width of the J/ψ. Asymptotic freedom was discovered by David Politzer at Harvard, and simultaneously by a group at Princeton.

Quantum chromodynamics and the electroweak theory comprise what is now known as the standard theory of elementary-particle physics. It appears to offer, in terms of 17 arbitrary parameters, a complete and correct description of particle phenomenology. There are no loose ends—no observed phenomena that are incompatible with the theory.

At least there were none until March of 1984. CERN is the world's center of high-energy physics for the simple reason that it has made available, for more than a decade, the world's highest energies. The payoff came in 1983 with the discovery at CERN, by Carlo Rubbia and his gang of 137, of the long-sought weak intermediaries W± and Z⁰. These particles, too, seem to behave just as theory says they should. However, the latest CERN data purport to reveal an anomaly. At last, there is something that appears inexplicable in terms of the standard model. Surprises, which have been so much a traditional part of the particle game, may come again. Let's hope so.

Author's note: Alas, it was not to be. Better data and more careful interpretation reveal no discrepancy with theory. The standard model reigns supreme. We shall soon see what larger accelerators, now beginning to operate, will have to say.

In 1927 one of my Harvard predecessors, Percy Bridgman, wrote: “Whatever may be one's opinion as to the simplicity of either the laws or the material structure of nature, there can be no question that the possessors of some such conviction have a real advantage in the race for physical discovery. Doubtless there are many simple connections still to be discovered, and he who has a strong conviction of the existence of these connections is much more likely to find them than he who is not at all sure they are there.”


Sadly, for five and a half years, I was a subject of American repression, forced to live the hard life of a bachelor in internal exile at the far reaches of the nation, California. I was exposed to starvation in Pasadena (whose restaurants are firmly closed on Thanksgiving Day), to earthquakes in Palo Alto, and to the Filthy Speech Movement in Berkeley. Living from hand to mouth in the land of the setting sun and the silicon goddess was precarious, and I leapt at the opportunity to escape to the slush in January of 1966, for a mere 30 percent cut in salary. All this is history, but even my dark cloud has a silver lining. Few have survived for so long in such a strange land, and have returned practically unscathed. I have become an expert in the ways of the West, a veritable Margaret Mead of California, the first exobiologist with on-the-job training.

Many great discoveries were made in California before my time: artificial elements, cyclotrons, gold mines, and the Pacific Ocean are examples. Let me turn to more recent events in the history of my own discipline, elementary-particle physics.

Many Californians are of Basque ancestry, having been brought to California as sheepherders. Richard Feynman, not himself a Basque sheepherder, hailed from Far Rockaway, which is not all that far from Sheepshead Bay. For this reason, Feynman was the first person to compute the Lamb shift, but he got the wrong answer. The correct result was obtained by my thesis advisor, Julian Schwinger, who only subsequently moved to California, not, at last report, to herd sheep. Feynman's diagrams, the key to his computational skills, have become a mainstay of the decorative arts. They are a prime example of what we physicists call “spinoff”: serendipitous contributions to high technology which are the inevitable accompaniment to abstract thought, the ultimate dollars and cents justification of the leisure of the theory class, the true secret of American know-how. Teflon, Tang, and artificial soda water are other convincing examples of spinoff, gifts of pure science to impure sinners. Who would deny us a mere $5 billion to build the Superconducting Super Collider and continue our grand tradition?*

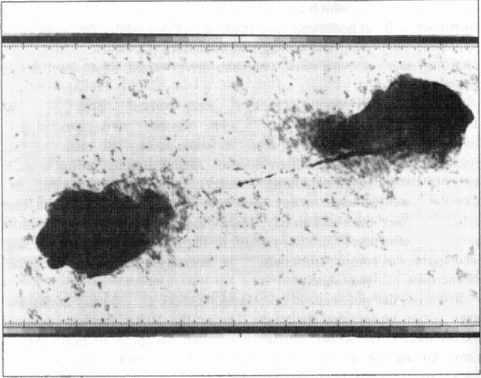

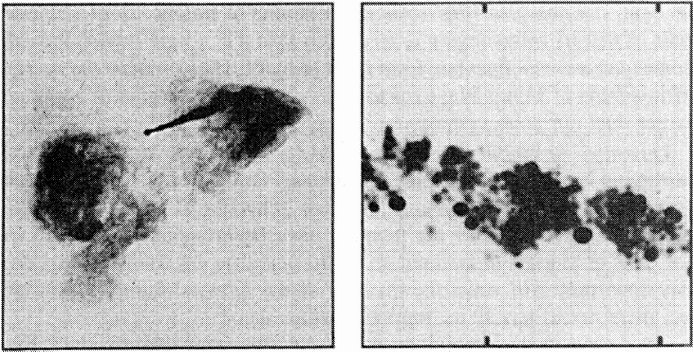

Donald Glaser is another California achiever. Long ago, while pondering the curious behavior of his Nth beer, he concocted the bubble chamber, a notorious and dangerous instrument which led to the discovery of hundreds of new and unwanted elementary particles. This produced a profound dilemma: Were all these particles equally, and hence not very, elementary? This was the view of Geoffrey Chew, leader of the renowned school of Maximal Analyticity and defender of Nuclear Democracy. The only alternative was quarks, which not even Murray Gell-Mann could correctly pronounce all of the time. In the end, quarks emerged victorious and even managed to purge themselves of their more radical fringe, the Quark Liberation Front, and to implement a successful affirmative action program with respect to color. It sounds like a happy story, but remember this: Nuclear Democracy is dead, as dead as the nuclear family, and the population explosion of elementary particles continues unabated. All this because of Don's bubble chamber. The moral: Never do today what might never get done tomorrow.

Understandably, Luis Alvarez soon tired of the many new particles being discovered in his bubble chambers. “Seen one, you've seen them all,” he said. Thanks to his seemingly Spanish surname, he set off to Egypt to invent the CAP scanner: computer assisted pyramidology. Heroically, utilizing the most powerful cosmic rays, he searched for secret chambers in the great Pyramid of Cheops, and dreamed of the untold wealth of the Pharaohs that might be his. The search was in vain, and Alvarez returned to California, a broken and embittered shell. Together with his son, he conspired to reveal the secret of how the dinosaurs died, and he predicted, beyond a reasonable shadow of doubt, that the same would happen to us. In precisely 13 million years (and this, incidentally, explains the mysterious but well-founded fear of the number 13, or triskaidekaphobia, to physicians and songwriters); in precisely 13 million years the Sun's dread, but invisible, spouse Nemesis will return to reap its destructive harvest, and to end the world as we know it. Foolish astronomers, even now, are searching the skies for Nemesis. When they find it, perhaps they will foretell the precise day, hour, and minute of our species' inevitable demise. Because Alvarez had to tell it as it is, all human hopes and aspirations for the future have been crushed.

Kendall, Friedman, and Taylor, laboring at the Stanford Linear Accelerator Center, a small atom smasher merely two miles long, patiently repeated the experiments Ernest Rutherford performed 56 years earlier, but at a somewhat higher energy. Just as their predecessor discovered the atomic nucleus, they found evidence for the existence of pointlike particles within the very proton, pointlike particles they pronounced par-tons. They were, of course, mistaken. Their pointlike particles were none other than the quarks that Gell-Mann had prophesied but abandoned years before. Despite their error, Kendall and Friedman have escaped exile, but sad to say, Dick Taylor still languishes in the golden state.*

We come, inevitably, to the great Goldhaber clan, America's answer to the Swiss Bernoullis, and to Gerson of California in particular. It was the cold winter of '76, and most citizens were scrimping and saving to buy fireworks with which to celebrate our Bicentennial. The Goldhabers were far too busy to concern themselves with the decisive battle being fought between the charmed quark and the Wicked Witch of the West. The Witch had gone so far as to deny charm's birthright by official proclamation in Physical Review Letters. Gerson, though he was at Berkeley, was our most valued double agent. We arranged a last clandestine meeting in April in Wisconsin. The Experimental Meson Spectroscopy Conference was scheduled for June. If charm were not found by then, they would force me to ingest my chapeau. Time was short. Our desperate plan succeeded, and charm was revealed on schedule. The Wicked Witch of the West had been not merely conquered but converted, and she designated Gerson as California Scientist of the Year. He was also awarded the highest honor bestowed by the University of California—an orange circle parking sticker.

All these great discoveries notwithstanding, it is not surprising that some experiments, especially in California, do not stand quite so well. I have written a small poem, somewhat distasteful, that celebrates some of these results, to wit, the hunt for fractional or magnetic charges and the discoveries of remote viewing and of anomalons. The poem ends upon a more speculative note. But first, a little background:

James Clerk Maxwell did not believe in the existence of fundamental particles carrying charge: neither electric nor magnetic. Nevertheless, by recognizing the self-evident symmetry between electricity and magnetism, as in the old saw “Why is a cat rubbed against a window like a compass?” he set us upon the road leading inexorably to the great grand unification of today and to microwave ovens. In 1896, the renowned Irish physicist George Johnstone Stoney, flushed by his rediscovery of the wheel which brought the Industrial Revolution to Ireland, named the elementary carrier of electric charge after his young lady friend Amber. Once named, the electron was observed in the laboratory only one year later. Maxwell would have been outraged, had he not been dead for almost two decades.

This brings us to Paul Adrien Maurice Dirac, once unanimously acclaimed as the World's Greatest Living Physicist, whose famous textbook on quantum mechanics was rejected by the prestigious Cambridge University Press. In retaliation for this slight, Dirac sent his book to the rival Oxford Press, and transferred his allegiance to Florida State University in Tallahassee. In between, he realized that electromagnetic symmetry demanded that Maxwell could neither be half-right nor half-wrong. The existence of the electron demanded that there be magnetic charges as well.

All of Dirac's predictions have come true. For this reason, experimenters discover magnetic monopoles every few years, only to retract them later for technical reasons. My colleague, Ed Purcell, is the only experimental physicist who has looked for magnetic monopoles but never found one. For this, he was awarded the coveted Nobel Prize in physics.

Quarks have fractional electric charges, but you are not supposed to see them. Nonetheless, many experimenters search for fractional charges. They hope to incite yet another revolution in physics. Observable fractional charges and Dirac magnetic monopoles bear a curious relationship to one another: either one of them could in principle exist. One but not both. Thus it is remarkable that these two mutually exclusive entities have apparently both been reported by scientists working at the same laboratory in California.

Of the alleged observation of fractional charges, perhaps the less said the better: Experiments are a decade old and have neither been confirmed nor retracted. The one magnetic monopole candidate appeared on St. Valentine's Day, 1982. A year later, I sent the following telegram to the investigator, Blas Cabrera:

Roses are red;
violets are blue.
The time is now
For monopole two.

I have not yet received an answer.

Certain theorists, known as neonatal cosmologists, have clarified the subject by establishing two alternative scenarios. (A scenario, incidentally, is what you call a theory when you've got more than one.) In one scenario, the universe was created chock-full of magnetic monopoles. There were so many that billions of years ago the universe ceased to exist. In the second, perhaps more plausible, scenario, there is just one magnetic monopole in the entire observable universe—perhaps it passed through Cabrera's apparatus on St. Valentine's Day in 1982.

A third remarkable result emerged in the environs of Palo Alto, at SRI, which was known as the Stanford Research Institute until its goals diverged from those of the university. In high school, I was a fan of J.B. Rhine and of his demonstrations of extrasensory perception at Duke University. While his experiments were inconclusive, those of Puthoff and Targ at SRI were convincing enough to be published in the Proceedings of the IEEE. The human talent of remote viewing permits the uninformed individual placed in a sealed room to describe the observations of a distant colleague. A program of experiments designed to confirm this effect was proposed by my colleague Paul Horowitz in collaboration with the Amazing Randi. It was deemed to be unnecessary by the SRI scientists.

Finally, I come to the exotic discipline of nuclear physics, a branch of physics that is not actively pursued at Harvard University. Few of my colleagues realized that there are precisely 3 × 7 × 19 known species of atomic nuclei that live for at least one year. Even at MIT, interest in nuclear physics appears to be waning, for they did not even submit an expensive proposal to the latest nuclear accelerator sweepstakes. One reason for this deplorable lack of interest in a fundamental science is the fact that the latest half dozen synthetic chemical elements, quite unlike plutonium or curium, have not even been given names, only numbers. “Do not fold, spindle, or mutilate me!” cry their neglected nuclei. It is in this context that the nuclear scientists of California have come to the fore to fill a much needed gap in the literature. They have discovered a new effect, indeed, a new form of nuclear matter that has no conceivable rational explanation: the anomalon. Once again, like the fractional charge, the monopole candidate, and remote viewing, the anomalon is a peculiarly Californian experience. Like so many allegedly great California wines, it does not travel well.

The last experiment I shall discuss is the reported observation of the zeta particle that was tentatively announced at the International Conference on High Energy Physics at Leipzig in the summer of 1984. The results emerged from the Crystal Ball detector operating at the German electron synchrotron center in Hamburg. The Crystal Ball detector was designed and created in California by Californians. The director of the laboratory and I agree that the discovery of the zeta particle would be, if correct, the most significant event in particle physics in the past decade.*

Now, a poem:

To Abalone Unbound

What with chromodynamics and electroweak too,
Our standardized model should please even you.
Tho' once you did say that of charm there was none,
It took courage to switch, as to say, earth moves, not sun.
Yet your state of the union penultimate large
Is the last known haunt of the fractional charge,
And as you surf in the hot tub with sour-dough roll,
Please ponder the passing of your sole monopole.
Your olympics were fun, you should bring them all back—
For transsexual tennis or anomalon track,
But Hollywood movies remain sinfully crude,
Whether seen on the telly or remotely viewed.
Now fasten your sunbelts, for you've done it once more.
You said it in Leipzig of the thing we adore,
That you've built an incredible crystalline sphere
Whose German attendants spread trembling and fear
Of the death of our theory by particle zeta,
Which I'll bet is not there, say your articles, later.


Before we talk about physics, I'd like to talk to you about some of the personal things you wrote about in your recent autobiographical book, Interactions. In the book, you discuss your transformation from a “nerd”—I believe that is what you called yourself—at the Bronx High School of Science in New York to a Nobel Prize winner. Throughout the book, you credit your father, a plumber and a craftsman, for instilling in you a lifelong curiosity about how things work. How do you manage to keep the fires of curiosity burning after all these years of scientific investigation?

Glashow: That is a difficult question. You mention “nerds.” Of course, “nerds” are an endangered species today, and I'm a great defender of them. I go hunting for “nerds” because they make promising graduate students, eventually.

Now, how could I still be interested? I got into physics because I found it so much more interesting than anything else. I was not very good at sports . . . so I picked physics as something that was a lot of fun. And it still is. It's very exciting and certainly addictive.

I think I'm not as smart as I used to be, but I'm certainly at least as much interested in these questions. They are always the same questions: What is it all for? What is it all made of? How does it all work? They still fascinate me.

Did you look at, say, a radio when you were growing up, and want to take it apart?

Glashow: Oh, yes. I took clocks apart, I took radios apart. But I could never get them back together again. There would always be something left over that didn't fit and it wouldn't work. So that's why I'm not in experimental physics. I had the most marvelous electric train set, vintage 1930s, which I managed to take apart and I could never get back together again. My experimental life is full of tragedies.

So you kept taking things apart until you reached the subatomic level, so to speak.

Glashow: So to speak.

In your book, you explain the intricacies of subatomic particles, bosons, and quarks, but you also tantalize us with a story about your dad and how he survived falling into a vat of molten lead. Tell us more about that.

Glashow: My father came to the U.S. from Russia in 1905. He first took all kinds of laboring and construction jobs. At one point, he was building a house, or putting plumbing into one. They used molten lead on the joints and he simply fell into the tank of lead. He explained to me that he was protected by a tiny layer of air, so he didn't get seriously burnt.

I was impressed by the physics and by the fortuitous accident in which nothing serious happened.

Your father came through Ellis Island during the wave of immigration. What kind of transformation went on there? Some came out with names that were not theirs.

Glashow: Our name in Russia was Glukhovsky. Neither the immigration officer, nor my father thought that that name would do in America. So they had an amicable discussion and they puzzled things out—in a totally friendly fashion, so my father said—and came up with this bizarre name, Glashow. Now there are hundreds of Glashows.

Tell us about the driving force to succeed that often comes from being of immigrant parents. You have two brothers, one of whom is a doctor, and the other a dentist, both very successful. Did the expectations concerning what is success and what is not affect you as a boy?

Glashow: My parents were very concerned about how well I did in school as I am with my kids. But I don't think it was the parental influence as much as the environment of our middle-class, half-Jewish, half-Irish Manhattan neighborhood. There was a strong desire to succeed, to pull out of our mean existence. The other children too have been very successful—many of them doctors, dentists, lawyers, and scientists. The whole circumstance was one that emphasized learning.

Just as there is, today, a strong tendency not to stand out, not to accomplish anything, and to not be a “nerd,” at that time it was not so much the desire to be a “nerd,” but the desire to triumph in school.

Education was the vehicle for upward mobility.

Glashow: Absolutely.

You've written that there is no intellectual pursuit more challenging than physics. What is it about physics that makes it so difficult?

Glashow: No, it's not a difficult science. I think modern biology is much more difficult: you have to remember the names of all kinds of god-awful chemicals. You don't need that in physics. The thing about the kind of physics I do—which is fundamental physics—is that we don't know the rules. It's a contest, a game. It's a kind of gambling game, where you put your money where your mouth is concerning what you think is the way nature works. Then, if you're lucky, you get proven right. There is no greater feeling than winning this bet with nature.

Once upon a time, my buddies and I figured out that there had to exist a fourth kind of quark, and 10 years later it was found. That feels pretty good. It's the kind of feeling that I've had a few times in my life. It's a challenge, a game. It's like going to a magic show and figuring out how the tricks are done. It's like reading a detective story and trying to anticipate the ending. It's all of these things. It's a very human activity, except that it is focused on one simple question: how does it all work?

But your detective story presupposes you possess the language of very advanced mathematics?

Glashow: It presupposes the knowledge of not so advanced mathematics. I can't understand physics, unless I understand mathematics; I can't understand chemistry, unless I understand physics. This is a very important fact because, as you know, Harvard is divided into three parts: its sociologists, its humanoids, and its scientists.

At Harvard, you get to talk to people in other disciplines. But scientists must enter the others' turf because other scholars deal with horizontal disciplines where, in a sense, they possess shallow knowledge of a wide variety of things. But ours is a vertical discipline where everything depends upon everything else. They never know science. We know a little bit about literary criticism, or about history, or about ecology or about sociology. We're always compelled to discuss things or argue things or enjoy conversations on their turf, never on ours, because they lack these skills.

It's true we live in two societies and in a sense it's not true. There is one society: there are those who are literate and understand things, and there are the rest, who don't. Unfortunately, two-thirds of Harvard misses the best part of human knowledge.

Perhaps now is a good time to mention your plea for scientific literacy among students.

Glashow: It's a mess. But illiteracy is not just in science, it's not only in math, as you know, but most are illiterate about history, too. I would venture to say that less than half the population of the U.S. knows the relative time order of the American Revolution and the Russian Revolution. Very few people can identify Rome on a map of the world.

It's worse in science. Some try to improve science education. The National Science Teachers Association is very ambitiously attempting to unify all of science education. All of science can fit together into a unified meaningful whole, instead of teaching bits and pieces, here and there.

Why are there so many talented mathematicians, originally trained in physics, but comparatively fewer physicists who come from mathematics?

Glashow: Generally, the tendency toward abstract mathematics is irreversible. There are physicists who move into more abstract mathematics circles. Einstein is a good example. Heisenberg was another. They began with down-to-earth questions. They do quite well at solving those problems and then move on to more abstract ones. It's very rare to go the other way. Physicists often move in another direction. There has been a very large emigration of physicists into biology. Walter Gilbert is an example, and there are a dozen others, of those who began as physicists and moved into biology.

Let's turn to the need for university professors to teach as well as to do research. You teach a “Core” course at Harvard in physics for undergraduates. I studied freshman physics with a friend of yours, Leon Lederman. I remember one of his lectures on the conservation of energy; it involved a 200-pound brass sphere suspended by a wire from the ceiling of the lecture hall. Lederman walked to the side of the lecture hall and very slowly and carefully placed his back and the back of his head against the wall. Then he had an assistant move the sphere near him. He grabbed the sphere between his hands and brought it right to the tip of his nose and very gently released the sphere and let it slip through his hands. The sphere, of course, swung across the room in a great arc to the far wall, swung back and stopped short, what seemed just microns in front of his nose. We all gasped and applauded madly. Lederman was always doing things like that.

Glashow: I often wondered where Leon got that funny-looking nose . . .

Making physics fun for us.

Glashow: That was a demonstration I witnessed in class at Cornell in 1950. I also perform it from time to time.

Sometimes we ask a graduate student to lie down on a bed of nails and smash a large cinder block over his chest. Once I hid a plastic container of ketchup on the student's chest and he got up, covered with “blood.” But it's hard to get graduate students willing to sacrifice their shirts.

You have said that it is important for a teacher to be a researcher to enliven theory with practice.

Glashow: I don't think there are clear boundaries between teaching, research, and learning. As we teach, we learn, and as we do research, we present the research in teaching. As students respond, we learn how better to do the research. It's all folded together. That's what major American universities are all about. They mix these activities, and so they should.

In your “Core” course, what would you like your students to come away with?

Glashow: I would like them to be excited about, and to understand a little bit of, how it is that people have been able to understand (to the extent they do) what matter is made of, what goes on in a brick as you get ten times closer, as you blow it up in size by a factor of 10, then another factor of 10, then another and another and another; to understand exactly how it's put together.

And, conversely, if you imagine lots of bricks, the whole world, the solar system, the galaxy, the universe itself, to reconcile the birth and evolution of the whole of the universe with the properties of matter on a very elementary scale.

At the moment that's what it is all about: the physics of the very large and the physics of the very small have come together. The snake is in the process of eating its tail, and it's very exciting to be around at this time. Everything is finally being put together.

Could you tell us something about what is going on at the subatomic level: what the weak force is, and whether there are things smaller than quarks?

Glashow: That is a lovely question. Every time I get asked the question I wonder “Does he understand air pressure? Does he understand why Boyle's law is true? Why there is a spring in the air?” Many times the answer is no, and if it's no, it's very hard to go further and explain what the weak force is, what the strong force is.

Once upon a time (about the 1930s) we realized that all of the many different displays of force and motion are reducible to just four forces which we call, briefly, the strong and the weak nuclear force, gravity, and electromagnetism. Actually, gravity and electromagnetism are about all you need for most anything.

Plumbing, my father's specialty, depended on gravity because water goes down, and it depended on electromagnetism because electromagnetism explains how you wipe a joint and why copper is what it is, and everything else about everything you see, feel, smell, touch, or do. Ultimately, you do need the other two forces, the strong and weak nuclear forces. They have to do with the atomic nucleus and radioactivity. But they're important because, if you didn't have a nucleus, you wouldn't have an atom, and we wouldn't have anything else.

So the strong and weak forces have to do with the atomic nucleus. What we who won the Nobel Prize some years ago (and a number of other people who didn't) realized is that two of these four forces are really different aspects, different avatars, of the same underlying equation or system; namely, that the weak force and electromagnetism are really one.

So, in a sense, we've reduced the number—and only in a sense—of forces from four to three, suggesting a further reduction from three to two and, of course, some ultimate unified dream of Einstein—one. But we ain't there yet.

So much of experimental and theoretical work in physics depends on giant pharaonic-sized machines. Will these mega-machines help you in your work as a theoretician?

Glashow: We depend on experimental information, and all these wonderful things we know about nature and the universe depend upon telescopes, X-ray observatories, accelerators, and other devices. So I am tremendously excited about the new accelerators that are to come into operation in Europe. I'm even more excited about the Waxahachie initiative, the Gippertron—Ronald Reagan's accelerator in Texas.

I didn't know it was the Gippertron.

Glashow: Not officially. It's the Ronald Reagan Center for Particle Physics. If it's funded . . . .

Some scientists think that if we take the billions of dollars that might go into that machine and put the funds into something else . . .

Glashow: Well, I don't see why they want to take my money away. I agree that science is underfunded and should be doubled. That's just about what we're asking for in particle physics. We can build the Superconducting Super Collider (SSC) with double the present budget for high-energy physics. As far as the other scientists go (who are doing wonderful work), their budgets should be doubled, too. But I didn't want to build my machine by taking away their funds. I doubt whether they want to do their work by taking away our money.

Is it more difficult to persuade Congress to support this project because we're not going to get, say, Teflon out of it?

Glashow: Teflon was pretty good. That came from the atomic bomb project. Did you know that? In separating uranium, which was something necessary to do in order to get U-235 to make bombs—which is what was wanted in those days—they performed experiments with heavy gases, uranium gases. You make a gas out of uranium by combining it with fluorine. One of the things that came out of the Manhattan Project was, in fact, Teflon. I'm sure that all sorts of wonderful technology will flow out of building the SSC: superconducting energy storage, mass transit, tunnel development, all kinds of technologies at the cutting edge.

I detect that you may be a little bitter about having to share your Nobel Prize, unlike in Madame Curie's day. Now there seem to be two and three people who share the prize.

Glashow: It's true the person who gets the whole prize gets three times more money than a person who doesn't and has to share it with a couple of other people. In fact, you can even get a quarter of the money.

But the money is not the issue with the Nobel Prize. It's just a queen for a day or a king for a day. It's really quite wonderful, a great honor. It's nice to be honored. But I'm very happy that I shared my prize with two good friends, Steven Weinberg and Abdus Salam, who were, and remain, my very good friends.

How are the Europeans doing in theoretical physics compared to us?

Glashow: It's hard to pin that down exactly. At the moment, the best experimental facilities are in Europe and that's very inspirational to European physicists, so they do very well. The Italians do superbly, the French do very, very well in condensed-matter physics. Everybody's doing well. The Russians are marvelous. It's not a competitive game. It's always been the most international sport of all—the pursuit of physics.

... Physics was international in the seventeenth century and so it is today. We treasure our international fellowship very much and we rarely try to say, “This was done in America, this was done in Europe, this was done in Russia.” It was done by us, working together.

There are very few women in theoretical physics. Is there anything that can be done about it?

Glashow: That's changing and yes, there are things you can do about it. The solution is to have women who are interested in physics learn physics at an early enough stage. But there are lots of women and they do very well. Some have been eminently successful. So it's changing. At Harvard we have 25 or 30 percent women in my field. We don't yet have 50 percent.


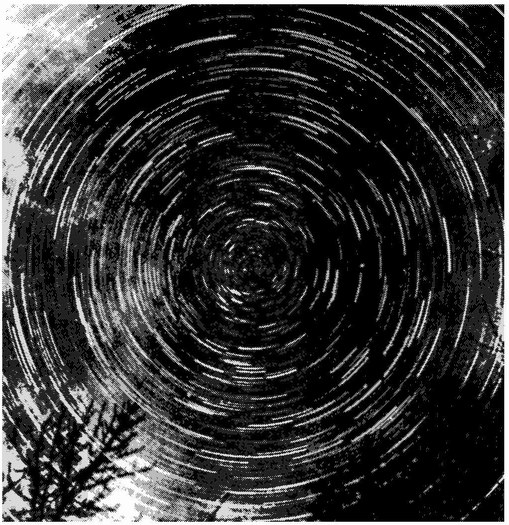

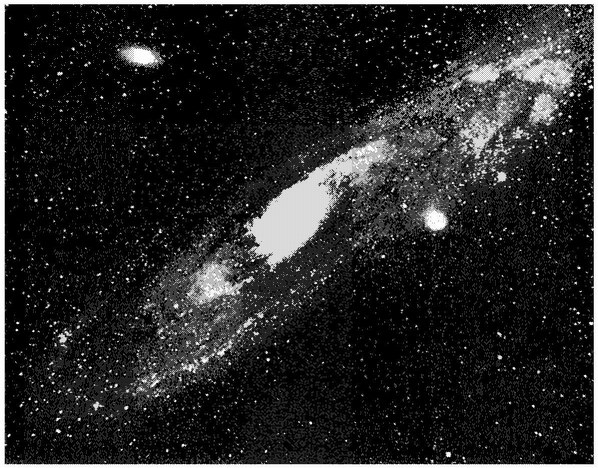

Early societies discovered that the stars and the Milky Way, the entire firmament containing the 12 principal constellations, seem to rotate rigidly about the earthbound observer. Each star, in the course of an evening, moves along an arc of a circle. The photograph in Figure 1, made with a long exposure, illustrates this elementary truth. To the ancients, this was evidence for a fixed and immutable celestial sphere to which the stars are attached, and of its daily rotation about the Earth.

Figure 1. A long-exposure photograph of star trails around the north celestial pole.

The Greeks believed that various forms of matter on Earth could be explained as mixtures of four essential ingredients: fire, water, earth, and air. There was a fifth fundamental material, the “quintessence,” that explained the heavens. The motion of this perfect and heavenly substance could only be in a circle, the perfect figure with no beginning or end. The rotational motion of the celestial sphere was explained, and the Aristotelian vision of the cosmos was to reign for more than a millennium. Not every heavenly body was accounted for. There were precisely seven more that were evidently not part of the celestial sphere: the Sun, the Moon, and the five planets. (Five, not nine; three were yet to be discovered and Earth was a very special place.) The profound significance of these celestial objects to ancient societies is reflected today in the names of the days of the week, given in Table 1 in three representative Western languages.

Table 1. Names of the Days of the Week

| English | French | Latin |
|---|---|---|
| Sunday | Dimanche | Dies Solis |
| Monday | Lundi | Dies Lunae |
| Tuesday | Mardi | Dies Martis |
| Wednesday | Mercredi | Dies Mercurii |
| Thursday | Jeudi | Dies Jovis |
| Friday | Vendredi | Dies Veneris |
| Saturday | Samedi | Dies Saturni |

In Latin, each day of the week corresponds to one of the heavenly bodies, and to its representative deity. In French, this correspondence is maintained, except during the weekend. The English day names are direct translations of the Latin, with the Anglo-Saxon deities Tiw, Woden, Frigg and Thor usurping their Latin equivalents. Curiously enough, Venus (the token woman deity) is replaced by Frigg, a.k.a. Mrs. Woden. The histories of religion, timekeeping and astronomy are intricately woven together.

The seven heavenly bodies move across the sky in a much more complex fashion than do the fixed stars. The Moon travels in circles, but different circles than the stars. This was easily accommodated by imagining the Moon to be attached to a second celestial sphere with its own circular motion. So also for the Sun, embedded upon a third serenely rotating sphere. The word “planet” stems from the Greek word for wanderer, and wander they do. Some of the planets even reverse the sense of their motion across the sky from time to time. The simple notion of seven rotating and concentric spheres had to be abandoned.

Still, circular motion was philosophically mandated for the quintessential heavenly bodies. Tricks were devised. Planets were to move in circles, but circles not centered about the stationary Earth: eccentric motion. Planets were to move in circles within circles: epicycles. Planets were to move in circles, but not at a constant rate as viewed from the center: equants.

Using eccentrics, epicycles, and equants, Ptolemy, with a great deal of hard work, formulated his system of heavenly motions in the second century A.D. The Earth was immobile in the very center of the universe. The past and future motions of the planets could be explained. Serious discrepancies between prediction and observation were resolved by small adjustments of the 70 simultaneous and independent motions of the seven bodies. Although the Ptolemaic system was incredibly intricate and contrived, and understood by only a few, it was generally accepted; it worked, and it put Earth right in the middle, where Earth belongs. After the Dark Ages, when Arab guardians returned the Ptolemaic system to Europe, it soon became part and parcel of Christian dogma.

Nicolaus Copernicus (1473–1543) was a devout scholar and a canon of the Roman Catholic church. A firm believer in the primacy of circular motion, he was disturbed by the complexity and arbitrariness of the Ptolemaic theory. To Copernicus, “. . . the planetary theories of Ptolemy and most other astronomers, although consistent with the numerical data seemed . . . to present no small difficulty . . . Having become aware of these defects, I often considered whether there could perhaps be found a more reasonable arrangement of circles . . . in which everything would move uniformly about its proper center (Copernicus despised equants), as the rule of absolute motion requires.”

Copernicus realized the price that must be paid for a simpler system was to abandon the fixed Earth, to put the Sun in the center of the universe, and to have the rotating Earth move in a circle about it. Far from putting forth such a radical notion himself, he appealed to prior authority, “. . . according to Cicero, Nicetas had thought the Earth moved . . . according to Plutarch certain others had held the same opinion . . . when from this, therefore, I had conceived its possibility, I myself also began to meditate upon the mobility of the Earth. And although it seemed an absurd opinion, yet, because I knew that others before me had been granted the liberty of supposing whatever circles they chose in order to demonstrate the observations concerning the celestial bodies, I considered that I too might well be allowed to try whether sounder demonstrations of the revolutions of the heavenly orbs might be discovered by supposing some motion of the Earth.”

The Copernican system was at least as predictive as Ptolemy's and very much simpler. All the planets, Earth included, could be thought to move on concentric spheres. Only a few small epicycles and eccentrics had to be added to give quantitative precision.

Copernicus' actions were completely consistent with his firm religious beliefs. His heliocentric theory was but a reflection of the mind of the Creator, its greater simplicity but a reaffirmation of the principles of Aristotle. Yet Copernicus made very few converts. The old church and the new were firmly committed to the geocentric view. After all, it is written in Scripture: “Then spoke Joshua to the Lord in the day when the Lord delivered up the Amorites before the children of Israel, and he said in the sight of Israel, Sun, stand thou still upon Gibeon; and thou, Moon, in the valley of Ajalon. And the Sun stood still, and the Moon stayed, until the people had avenged themselves.” If the Lord could put the Sun temporarily to rest, then surely the Sun must move. Perhaps the Copernican system was useful to the church as a computational tool (the calendar was getting a bit out of whack), but as a statement about the real world, it was heresy. Eventually, the Catholic Church was to put Copernicus' magnum opus on the index of forbidden books as “false and altogether opposed to Holy Scriptures,” where it was to remain, banned to practicing Roman Catholics, until 1835.

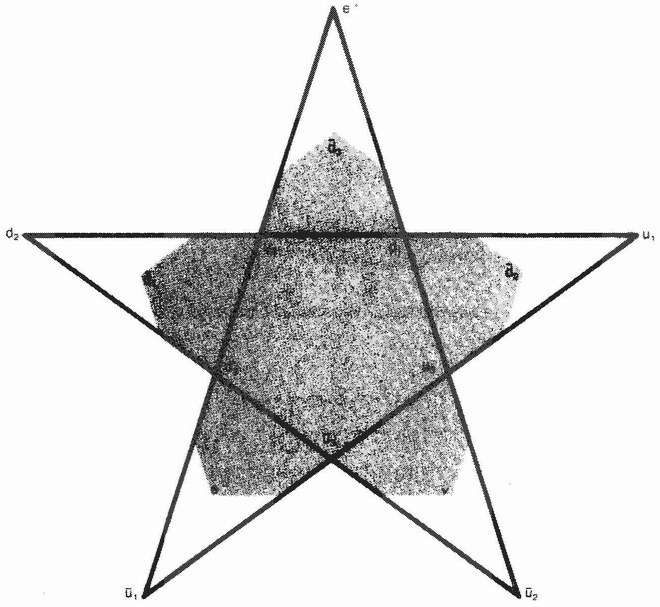

Johannes Kepler (1571–1630) was a convinced Copernican whose purpose in life was the perfection of the heliocentric theory. Even more than Copernicus, he believed in the underlying simplicity of physical laws. For example, he asked why there should be exactly six planets, no more, no less. (Earth has become merely another planet in the Copernican view: a degrading heresy, but nonetheless true.) Kepler knew that there exist just five regular geometric solids: tetrahedron, cube, octahedron, dodecahedron, and icosahedron. Place these five solids within a series of six nested spheres, and the radii of the spheres will be in proportion to the solar distances of the six planets. This scheme of Kepler's is pure nonsense, of course, but it is a true indication of his boundless imagination.

Although the telescope had not yet been invented, the astronomical data available to Kepler were far superior to those available to Copernicus, thanks largely to the painstaking work of Tycho Brahe at his island observatory off Denmark. Measurement errors had been reduced by more than a factor of 10. Kepler set himself the problem of fitting the new and precise data on Mars' orbit to his cherished Copernican theory. After four years of study, he realized that the task could not be done: the theory was, quite simply, wrong. The motion of Mars is not a circle, nor even a circle as modified by epicycles, eccentrics, or equants. It is an ellipse. With this discovery, Kepler's faith in the fundamental simplicity of natural law was vindicated. The motion of each of the six planets could be fitted to a simple ellipse. No Ptolemaic tinkering was needed: eccentrics and epicycles joined equants in the great garbage heap of discarded physical theories.

Planets move in ellipses with the Sun at one focus. This is the first of Kepler's three great empirical rules, replacing the Aristotelian principle of circular motion. Planets, theologically speaking, were permitted to move in such imperfect orbits, since Kepler believed that they themselves were imperfect material bodies like Earth.

The second law replaces the notion of uniform motion: the line between the Sun and a planet sweeps out equal areas in equal times. The third law is the stunningly simple statement that the square of the period of a planetary orbit (its year) is proportional to the cube of the mean radius of its orbit. This law has no Aristotelian precedent, since it relates the motions of different planets to one another.
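As a quick numerical check of the third law, the sketch below computes T²/a³ for the six planets known to Kepler. The orbital values are rounded modern textbook figures supplied here for illustration, not data taken from the text. With the mean radius a in astronomical units (Earth's distance from the Sun) and the period T in years, the ratio should come out close to 1 for every planet.

```python
# Approximate mean orbital radii (AU) and periods (years). These are rounded
# textbook values supplied for illustration, not figures from the text.
PLANETS = {
    "Mercury": (0.387, 0.241),
    "Venus": (0.723, 0.615),
    "Earth": (1.000, 1.000),
    "Mars": (1.524, 1.881),
    "Jupiter": (5.203, 11.86),
    "Saturn": (9.537, 29.46),
}


def kepler_ratio(a_au, period_yr):
    """Return T^2 / a^3, which Kepler's third law says is the same for every planet."""
    return period_yr ** 2 / a_au ** 3


for name, (a, t) in PLANETS.items():
    print(f"{name:8s} T^2/a^3 = {kepler_ratio(a, t):.3f}")
```

Every ratio lands within a fraction of a percent of 1, even though the periods span a factor of more than a hundred.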

Another way of phrasing the third law is to say that the mean speed of a planet varies as the reciprocal of the square root of its distance from the Sun. To illustrate this law, Figure 2 graphs planetary speeds against distance from the Sun. The nine points lie on a smooth curve corresponding to 1/√R. The planet Mercury, only about 1 percent as far from the Sun as Pluto, travels in its orbit 10 times more rapidly.
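The step from the third law to the speed rule is short. Treating each orbit as a circle of radius R (a simplification, since the true orbits are ellipses), the mean speed is the circumference divided by the period T:

$$
\bar v = \frac{2\pi R}{T}, \qquad T^2 \propto R^3 \;\Rightarrow\; T \propto R^{3/2}
\;\Rightarrow\; \bar v \propto \frac{R}{R^{3/2}} = R^{-1/2},
$$

$$
\frac{\bar v_{\text{Mercury}}}{\bar v_{\text{Pluto}}} = \left(\frac{R_{\text{Mercury}}}{R_{\text{Pluto}}}\right)^{-1/2} \approx (0.01)^{-1/2} = 10.
$$

A planet a hundred times closer to the Sun therefore moves about ten times faster.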

None of Kepler's three great laws was deduced from a consistent theoretical framework; that would be accomplished later in the same century by Isaac Newton. Kepler searched for and found his relationships by careful analysis and inspired guesswork. He was perhaps the most brilliant theoretical astronomer of any age, just as Tycho Brahe may have been the best observational astronomer ever. Kepler and Brahe preceded the institution of the Nobel Prize by three centuries (and there isn't even a prize for astronomy).

Figure 2. Graph of planetary speeds versus their distance from the Sun

Supernovae are titanic stellar explosions, so great that they may outshine the galaxy in which they appear. Supernovae in our own galaxy are rare. One of the most spectacular took place in the year 1054. Chinese records report that the new star was so bright that it was visible all through the day and could compete with the Moon at night. No European report of the event has been discovered. How great are the fear and blindness that a totalitarian faith can engender!

The most recent supernovae observed in our galaxy appeared in 1572 and 1604. They are known as Tycho's supernova and Kepler's supernova. No astronomer since has been so honored.

Galileo was born in the year Michelangelo died (1564) and he died in the year of Newton's birth (1642). Like Kepler, Galileo was a believer in Copernicus. Not nearly as mathematically inclined as Kepler, Galileo was never to accept his colleague's notion of ellipses. In 1609, having heard about the Dutch invention of the telescope, Galileo constructed one himself. He immediately realized its potential as an instrument of war: it was to provide a “distant early warning” of approaching enemy ships. The military-industrial complex of the Republic of Venice paid handsomely for the device.

Galileo's first telescopic observations confirmed his Copernican prejudices. Three examples: The Milky Way appears to the naked eye as just that, a diffuse, continuous, milky band across the sky. Through the telescope, it is revealed as untold thousands of individual stars. (Today we know that our galaxy, the Milky Way, contains about 10¹¹ stars.) But faith decreed that the universe had been fashioned solely for the joys and sorrows of mankind. For what purpose are these invisible stars which can only be seen by means of magic devices? (The telescope, being of evident military value, was never accused of being an accursed instrument of the devil.) Of our nearest celestial neighbor, Galileo wrote “the surface of the Moon is not smooth, uniform, and precisely spherical as a great number of philosophers believe it (and the other heavenly bodies) to be, but is uneven, rough, and full of cavities and prominences, being not unlike the face of the Earth, relieved by chains of mountains and deep valleys.” Galileo saw blemishes on the surface of the Moon and Sun. Heavenly bodies were not so perfect as faith decreed.

The most startling of Galileo's discoveries was that Jupiter has four principal satellites, Io, Europa, Ganymede, and Callisto, which revolve about the planet. Their periods were found to obey Kepler's third law, an astonishing success of his model in an unanticipated new domain. No rational person, viewing Jupiter and its moons, could any longer doubt the heliocentric theory.

Galileo's work was not universally accepted. Tricks could be played with lenses, and indeed, some argued, they must have been, since Galileo's conclusions were metaphysically impossible:

There are seven windows in the head, two nostrils, two ears, two eyes, and a mouth; so in the heavens there are two favorable stars, two unpropitious, two luminaries, and Mercury alone, undecided and indifferent. From which and many similar other phenomena of Nature such as the seven metals, etc., which it were tedious to enumerate, we gather that the number of planets is necessarily seven . . . Besides, the Jews, and other ancient nations, as well as modern Europeans, have adopted the division of the week into seven days, and have named them from the seven planets: now if we increase the number of planets, this whole system falls to the ground . . . Moreover, the satellites are invisible to the naked eye, and therefore can have no influence on the Earth and therefore would be useless and therefore do not exist.

So argued the Florentine astronomer Francesco Sizzi in 1611.

Useless and invisible to the naked eye they may be, but Galileo's “Medicean Stars” (named after his patron) were there to be seen by anyone with access to a telescope. In the second half of the seventeenth century, five moons of Saturn were added to the celestial bestiary. They, too, satisfied Kepler's laws. Why did these simple laws work so well? The first key to the puzzle was the realization that the natural state of motion of an undisturbed object, whether on earth or in the heavens, is a straight line. Secondly, it was necessary to realize that the force which makes bodies fall to earth extends into the heavens and is responsible for the motions of planets. Thirdly, powerful new analytical techniques were needed to deduce the nature of a planetary orbit from the assumed form of the force.

Isaac Newton possessed the brilliant mathematical talent needed to solve a problem that had baffled all who came before him. Moreover, he was utterly convinced of the universality of physical laws. Celestial mechanics and terrestrial mechanics were, to Newton, one and the same discipline. Newton's hypothesis of a universal gravitational force, that two bodies attract one another with a force proportional to the mass of each body and inversely proportional to the square of the distance between them, enabled him to deduce Kepler's three laws. Much more than this, Newton showed that the very same force explained the motions of planets about the Sun, satellites about their primaries, terrestrial falling bodies, the movements of the tides, and even the trajectories of comets. The mystery of the motions of celestial bodies had been solved with purely terrestrial concepts. Or, rather, many mysteries had been reduced to one simply stated mystery: What is gravity? Published in 1687, Newton's work remains the most momentous scientific revelation of all time.
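Newton's own derivation was geometric, but the link between an inverse-square force and Kepler's second law can be checked numerically today. The sketch below is a minimal illustration under stated assumptions: units are chosen so that the Sun's GM equals 1, the planet's starting position and speed are hypothetical, and the orbit is stepped forward with the velocity Verlet method (a modern numerical integrator, not Newton's technique). It records the area swept by the Sun-planet line in equal time windows, along with the planet's distance from the Sun.

```python
import math


def accel(x, y, gm=1.0):
    """Inverse-square attraction toward the Sun at the origin: a = -GM r / |r|^3."""
    r3 = (x * x + y * y) ** 1.5
    return -gm * x / r3, -gm * y / r3


def swept_areas(x, y, vx, vy, dt, steps_per_window, windows):
    """Integrate the orbit with velocity Verlet and return, for each of
    `windows` equal time intervals, the area swept by the Sun-planet line
    and the planet's distance from the Sun at the end of the interval."""
    ax, ay = accel(x, y)
    areas, radii = [], []
    for _ in range(windows):
        area = 0.0
        for _ in range(steps_per_window):
            # Half kick, drift, recompute the force, half kick.
            vx += 0.5 * dt * ax
            vy += 0.5 * dt * ay
            nx, ny = x + dt * vx, y + dt * vy
            ax, ay = accel(nx, ny)
            vx += 0.5 * dt * ax
            vy += 0.5 * dt * ay
            # Area of the thin triangle Sun -> old position -> new position.
            area += 0.5 * abs(x * ny - y * nx)
            x, y = nx, ny
        areas.append(area)
        radii.append(math.hypot(x, y))
    return areas, radii


# Hypothetical planet starting at distance 1, moving faster than circular speed,
# so the orbit is an ellipse; ten windows of 1.5 time units span about one orbit.
areas, radii = swept_areas(1.0, 0.0, 0.0, 1.2, dt=1e-3, steps_per_window=1500, windows=10)
for area, r in zip(areas, radii):
    print(f"area swept = {area:.6f}   distance at end = {r:.3f}")
```

The distance swings between about 1 and about 2.6, yet every window sweeps the same area. That is the second law: because the force always points at the Sun, the quantity x·vy − y·vx (the angular momentum per unit mass) never changes, and the integrator chosen here preserves it step by step.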

The seventh planet was discovered by one William Herschel, son of an oboist in the Hanoverian Footguards Band and an accomplished professional musician himself. In his spare time, Herschel built telescopes: not ordinary telescopes, but the very best of his time. Herschel set himself the stupendous task of performing a complete and systematic survey of the heavens. In 1781, he discovered a curious new object which he believed to be a comet. Within months, it became clear that Herschel's comet was no comet at all, but a new planet. Naming the new planet proved vexing. Herschel called it “The Georgian,” in honor of his patron, King George III, and so it was known in Great Britain. The French insisted on the name Herschel, in honor of its discoverer, and so it was known in France. Swedes and Russians suggested the name “Neptune,” but a careful reading of mythology suggested a better alternative. Uranus, god of the sky and husband to Earth, was father to Saturn and grandfather to Jupiter, who, in his turn, begat Mars, Venus, Mercury, and Apollo (or the Sun). By the mid-nineteenth century, the name “Uranus” had become generally accepted. Incidentally, the 92nd chemical element, uranium, was discovered only a few years after Herschel's work, and so the element was named for the planet.

While the discovery of Uranus was a pure accident, that of Neptune was a triumph for Newtonian theory and the scientific method. Careful observations in the early nineteenth century revealed irregularities in the orbit of Uranus. Two great theoretical astronomers attacked the problem, one in France and the other in England. Each postulated an eighth planet whose gravity would perturb the motion of Uranus in just the fashion observed. The paper that John Couch Adams left at the Royal Observatory on October 21, 1845, began, “According to my calculations, the observed irregularities in the motion of Uranus may be accounted for by supposing the existence of an exterior planet, the mass and orbit of which are as follows . . .” Meanwhile, and completely independently, Urbain Leverrier, in Paris, prepared a paper entitled “Sur la planète qui produit les anomalies observées dans le mouvement d'Uranus. Détermination de sa masse, de son orbite et de sa position actuelle.” Each scientist explained precisely where and when to search the skies, and just what to look for. A short time later, on September 25, 1846, the Berlin observatory announced the discovery of the predicted planet. It was indeed, in the words of the director of the observatory, “the most outstanding proof of the validity of universal gravitation.” Eventually the eighth planet was named “Neptune,” but not without a fight.

Still, there remained small, but certain, irregularities in the motions of the outer planets. A ninth planet was predicted by Percival Lowell in 1915, but because no telescope powerful enough to detect this faint and distant object had yet been built, he did not live to see it discovered. Pluto was found by astronomers working at the Lowell Observatory in Flagstaff, Arizona, in 1930, and its discovery was announced on Lowell's birthday. Its astronomical symbol, ♇, is constructed out of its champion's initials. The first two transuranic elements, which do not occur naturally, were first synthesized at the Berkeley cyclotron. Since they come just after uranium in the periodic table, it was natural that they were named neptunium and plutonium, after the two most recently discovered planets. Today, the art of making new elements has advanced even further, and there are now fourteen known transplutonic elements. The game of naming chemical elements has lost its charm, and the last few elements simply have numbers.