CONSTRUCTING QUARKS

A SOCIOLOGICAL HISTORY OF PARTICLE PHYSICS

ANDREW PICKERING

THE UNIVERSITY OF CHICAGO PRESS

for Lucy

The University of Chicago Press, Chicago 60637
Edinburgh University Press, 22 George Square, Edinburgh
© 1984 Edinburgh University Press
All rights reserved. Published 1984
Typeset and printed in Great Britain by The Alden Press, Oxford
92 91 90 89 88 87 86 85 84

Library of Congress Cataloging in Publication Data
Pickering, Andrew.
Constructing quarks.
Bibliography: p.
Includes index.
1. Particles (Nuclear physics)--History. 2. Quarks--History. I. Title.
QC793.16.P53 1984 539.7'21'09 84–235
ISBN 0-226-66798-7 (cloth)
ISBN 0-226-66799-5 (paper)

Part One

3.2 Conservation Laws and Quantum Numbers:

Part Two

5.5 Lepton-Pair Production, Electron-Positron

6. Gauge Theory, Electroweak Unification

6.4 Electroweak Models and the Discovery of

7. Quantum Chromodynamics: A Gauge Theory

Part Three

10.3 The Standard Model Established:


Etched into the history of twentieth-century physics are cycles of atomism. First came atomic physics, then nuclear physics and finally elementary-particle physics. Each was thought of as a journey deeper into the fine structure of matter. In the beginning was the nuclear atom. The early years of this century saw a growing conviction amongst scientists that the chemical elements were made up of atoms, each of which comprised a small, positively charged core or nucleus surrounded by a diffuse cloud of negatively charged particles known as electrons. Atomic physics was the study of the outer layer of the atom, the electron cloud. Nuclear physics concentrated in turn upon the nucleus, which was itself regarded as composite: the atomic nucleus of each chemical element was supposed to be made up of a fixed number of positively charged particles called protons plus a number of electrically neutral neutrons. Protons, neutrons and electrons were the original ‘elementary particles’: the building blocks from which physicists considered that all forms of matter were constructed. However, in the post-World War II period, many other particles were discovered which appeared to be just as elementary as the proton, neutron and electron, and a new specialty devoted to their study grew up within physics. The new specialty was known either as elementary-particle physics, after its subject matter, or as high-energy physics (HEP), after its primary experimental tool, the high-energy particle accelerator.

Atomic physics, nuclear physics and even the early years of elementary-particle physics have all been subject to historical scrutiny, but the story of the latest cycle of atomism has yet to be told. Not content with regarding protons, neutrons and the like as truly elementary particles, in the 1960s and 1970s high-energy physicists became increasingly confident that they had plumbed a new stratum of matter: quarks. Gross matter was composed of atoms; within the atom was the nucleus; within the nucleus were protons and neutrons; and, finally, within protons and neutrons (and all sorts of other particles) were quarks. The aim of this book is to document and analyse this latest step into the heart of the material world.

The analysis given here is somewhat unconventional in that its thrust is sociological. Rather than treat the quark view of matter as an abstract conceptual system, I seek to analyse its construction, elaboration and use in the context of the developing practice of the HEP community. My explanation of why the reality of quarks came to be accepted relates to the dynamics of that practice, a dynamics which is at once social and conceptual. I try to avoid the circular idiom of naive realism whereby the product of a historical process, in this case the perceived reality of quarks, is held to determine the process itself. The view taken here is that the reality of quarks was the upshot of particle physicists’ practice, and not the reverse: hence the title of the book, Constructing Quarks.

The sociology of science is often taken to relate purely to the social relations between scientists, and hence to exclude esoteric technical and conceptual matters. That is not the case here. There is no escape from such topics because the emphasis is on practice and, in HEP, practice is irredeemably esoteric. This, of course, creates a communication problem: arcane practices are best described in arcane language, otherwise known as scientific jargon. To ameliorate the problem, I have sought to explain each item of HEP jargon whenever it first enters the text. I have also cited popular accounts of the developments at issue, for the reader who feels he would benefit from more background on particular cases. In this way I have tried to make the text accessible to anyone with a basic scientific education. It is perhaps appropriate to add that the main object of my account is not to explain technical matters per se but to explain how knowledge is developed and transformed in the course of scientific practice. My belief is that the processes of transformation are easier to grasp than the full ramifications of, say, a given theoretical structure at a particular time. My hope is to give the reader some feeling for what scientists do and how science develops, not to equip him or her as a particle physicist.

There remain, nevertheless, sections of the account which may prove difficult for outsiders to physics. I have in mind here especially the passages in Part II of the account which deal with the formal development of ‘gauge theory’. Gauge theory provided the theoretical framework within which quark physics was eventually set, and the development of gauge theory was intrinsic to the establishment of the quark picture. It would therefore be inappropriate to omit these passages from a historical account. However, the key idea which I use in analysing the development of theories is that of modelling or analogy and, unfortunately in the present context, gauge theory was modelled upon a highly complex, mathematically-sophisticated theory known as quantum electrodynamics (QED). QED was taken for granted by physicists during the period we will be considering, and it is beyond the scope of this work to go at all deeply into its origins. Thus, in discussing the formal development of gauge theory, I have to refer to accepted properties of QED (and quantum field theory in general) which I can only partially explain. This is the origin of the communication gap which remains in the text. On the positive side, I should stress that the more difficult phases of the narrative are non-cumulative in effect. For example, the uses to which particle physicists put gauge theory, discussed in Part III of the account, are much more easily understood than the prior process of theory development, discussed in Part II. The conceptual intricacies of the early discussions of field theory and gauge theory need not, therefore, deter the reader from reading on.

It remains for me to acknowledge some of my many debts: intellectual, material and financial. Taking the latter first, the research on which this book is based has been supported by grants from the UK Social Science Research Council. Without their support, it would have been impossible. The active co-operation of the HEP community was likewise crucial, and I would like to thank the many physicists who found the time for interview and correspondence. In the following pages I argue that research is a social activity, and I am happy to apply the same argument to my own work. The account of particle physics offered here is intended as a contribution to the ‘relativist-constructivist’ programme in the sociology of scientific knowledge, and I owe a considerable debt to all of those working in this tradition. I am particularly grateful to Harry Collins, Trevor Pinch, Dave Travis and John Law. The Science Studies Unit of the University of Edinburgh has been my base for this work; it has supported me in many ways and I am indebted to everyone there, especially Mike Barfoot, Barry Barnes, David Edge, Bill Harvey, Malcolm Nicolson and Steve Shapin. Thanks also to Peter Higgs and the other members of the Edinburgh HEP group for much information and many useful discussions. Moyra Forrest prepared the Index, and her assistance with library materials has also been invaluable. The typing of Carole Tansley, Margaret Merchant and, especially, Jane Flaxington has been heroic. To Jane F. likewise my thanks for life-support while this book was being written.

Edinburgh

A.P.

PART I

INTRODUCTION, THE PREHISTORY OF HEP AND ITS MATERIAL CONSTRAINTS

The scientist ... must appear to the systematic epistemologist as an unscrupulous opportunist.

Albert Einstein¹

The historian of modern science has to come to terms with the fact that the scientists have got there first. Very many accounts of the topics to be examined here have already been presented by particle physicists in the popular scientific press, as well as in the professional literature of high-energy physics (HEP).² These accounts all have a similar form (they are contributions to a well-established genre of scientific writing) and present a vision of science which is in some ways the mirror image, or reverse, of that developed in the following pages. I want therefore to begin by sketching out the archetypal ‘scientist's account’ of the history of HEP, in order both to indicate its shortcomings and to introduce the motivations behind my own approach.³ This sketch will involve the use of some technical terms which will only be explained in later chapters, but the detailed meanings of the terms are not important in the present context.

The scientist's account begins in the early 1960s. At that time, particle physicists recognised four fundamental forces of nature, known, in order of decreasing strength, as the strong, electromagnetic, weak and gravitational interactions. The strong force, responsible for the binding of neutrons and protons in nuclei, was of short range and was the dominant force in elementary-particle interactions. The electromagnetic force, around 10³ (i.e. 1000) times weaker than the strong force and acting at long range, was responsible for binding nuclei and electrons together in atoms, and also for macroscopic electromagnetic phenomena: light, radio waves and so on. The weak force, of short range and around 10⁵ (100,000) times weaker than the strong force, had, except in special circumstances, negligible effects. Those special circumstances pertained to certain radioactive decays of nuclei and elementary particles, and to processes of energy generation in stars. Finally the gravitational force was a long-range force like electromagnetism. It was responsible for macroscopic gravitational phenomena (apples falling from trees, the earth orbiting the sun) but it was also 10³⁸ times weaker than the strong force, and its effects were considered to be completely negligible in the world of elementary particles.

Associated with this classification of forces was a classification of elementary particles. Particles which experienced the strong force were called hadrons. There were many hadrons, including the proton and neutron, the constituents of atomic nuclei. Particles which were immune to the strong force — the electron and a handful of other particles — were known as leptons. The picture began to change in 1964, with the proposal that the constituents had constituents: hadrons were to be seen as made up of more fundamental entities known as quarks. Although it left many questions unanswered, the quark model explained certain experimentally-observed regularities of the hadron mass spectrum and of hadronic decay processes. Moreover, in the late 1960s and early 1970s it was seen that quarks could explain the phenomenon known as scaling, which had recently been discovered in experiments on the interaction of leptons with hadrons. In the scientist's account quarks thus represented the fundamental entities of a new layer of matter. Initially, though, the existence of quarks was not regarded as firmly established, principally because experimental searches had failed to detect any particles having the distinctive properties postulated for them (i.e. their having fractional electric charges). Leptons were not subject to a parallel ontological transformation; unlike hadrons they continued to be regarded as truly elementary particles.

Early in the 1970s, new theories of the interactions of quarks and leptons began to be formulated. First came the realisation that the weak and electromagnetic interactions could be seen as manifestations of a single electroweak force within the context of a theoretical approach known as gauge theory. This unification, reminiscent of Maxwell's nineteenth-century unification of electricity and magnetism, carried with it the prediction of the existence of the weak neutral current, which was verified in 1973, and of charmed particles, which was verified in 1974. Meanwhile, it had been realised in 1973 that a particular gauge theory, known as quantum chromodynamics or QCD, was a possible theory of the strong interactions of quarks. It was found first to explain scaling, and later to explain observed deviations from scaling. It explained certain interesting properties of charmed and other particles, and various other hadronic phenomena. Therefore QCD became the accepted theory of the strong interactions. Quarks had still not been observed in isolation. But both electroweak theory and QCD assumed the validity of the quark picture, and thus the existence of quarks was established simultaneously with the establishment of the gauge-theory description of their interactions. In the late 1970s, particle physicists were agreed that the world of elementary particles was one of quarks and leptons interacting according to the dictates of the twin gauge theories: electroweak theory and QCD. Finally it was noticed that since the unified electroweak theory and QCD were both gauge theories, they could, in their turn, be unified with one another. This last unification brought with it more fascinating predictions, which began to arouse the interests of experimenters in 1979. These predictions were not immediately verified, but many physicists were confident that they would be. Thus, not only was a new and fundamental layer of structure discovered, in the shape of quarks, but three forces previously thought to be quite different from one another (the strong, electromagnetic and weak interactions) stood revealed as but particular manifestations of a single force.

Apart from brevity, this sketch of the scientist's account of the history of quarks has the virtue that it names key developments. For example, it indicates that the quark idea was only one theoretical component in the developments we will discuss. The other component was gauge theory, which eventually provided the framework within which the interactions of quarks and leptons were understood. This is something to keep firmly in mind: it is impossible to understand the establishment of the quark picture without at the same time understanding the perceived virtues of gauge theory. The scientist's account also points to the role of new phenomena (scaling, neutral currents, charmed particles and the like) in supporting the quark-gauge theory view. The observation that the quark-gauge theory picture referred to new phenomena, quite different from those which supported the pre-quark view of the world, will also figure prominently in the following account. However, beyond specifying the key developments in HEP, the scientist's account goes on, either explicitly or implicitly, to specify a relationship between them. I want now to discuss this relationship, in order to highlight where the present approach departs from that of the scientist.

In the scientist's account, experiment is seen as the supreme arbiter of theory. Experimental facts dictate which theories are to be accepted and which rejected. Experimental data on scaling, neutral currents and charmed particles, for example, dictated that the quark-gauge theory picture was to be preferred over alternative descriptions of the world. There are, though, two well-known and forceful philosophical objections to this view, each of which implies that experiment cannot oblige scientists to make a particular choice of theories.⁴ First, even if one were to accept that experiment produces unequivocal fact, it would remain the case that choice of a theory is underdetermined by any finite set of data. It is always possible to invent an unlimited set of theories, each one capable of explaining a given set of facts. Of course, many of these theories may seem implausible, but to speak of plausibility is to point to a role for scientific judgment: the relative plausibility of competing theories cannot be seen as residing in data which are equally well explained by all of them. Such judgments are intrinsic to theory choice, and clearly entail something more than a straightforward comparison of predictions with data. Furthermore, whilst one could in principle imagine that a given theory might be in perfect agreement with all of the relevant facts, historically this seems never to be the case. There are always misfits between theoretical predictions and contemporary experimental data. Again judgments are inevitable: which theories merit elaboration in the face of apparent empirical falsification, and which do not?

The second objection to the scientist's version is that the idea that experiment produces unequivocal fact is deeply problematic. At the heart of the scientist's version is the image of experimental apparatus as a ‘closed’, perfectly well understood system. Just because the apparatus is closed in this sense, whatever data it produces must command universal assent; if everyone agrees upon how an experiment works and that it has been competently performed, there is no way in which its findings can be disputed. However, it appears that this is not an adequate image of actual experiments. They are better regarded as being performed upon ‘open’, imperfectly understood systems, and therefore experimental reports are fallible. This fallibility arises in two ways. First, scientists’ understanding of any experiment is dependent upon theories of how the apparatus performs, and if these theories change then so will the data produced. More far reaching than this, though, is the observation that experimental reports necessarily rest upon incomplete foundations. To give a relevant example, one can note that much of the effort which goes into the performance and interpretation of HEP experiments is devoted to minimising ‘background’: physical processes which are uninteresting in themselves, but which can mimic the phenomenon under investigation. Experimenters do their best, of course, to eliminate all possible sources of background, but it is a commonplace of experimental science that this process has to stop somewhere if results are ever to be presented. Again a judgment is required, that enough has been done by the experimenters to make it probable that background effects cannot explain the reported signal, and such judgments can always, in principle, be called into question. The determined critic can always concoct some possible, if improbable, source of error which has not been ruled out by the experimenters.⁵

Missing from the scientist's account, then, is any apparent reference to the judgments entailed in the production of scientific knowledge: judgments relating to the acceptability of experimental data as facts about natural phenomena, and judgments relating to the plausibility of theories. But this lack is only apparent. The scientist's account avoids any explicit reference to judgments by retrospectively adjudicating upon their validity. By this I mean the following. Theoretical entities like quarks, and conceptualisations of natural phenomena like the weak neutral current, are in the first instance theoretical constructs: they appear as terms in theories elaborated by scientists. However, scientists typically make the realist identification of these constructs with the contents of nature, and then use this identification retrospectively to legitimate and make unproblematic existing scientific judgments. Thus, for example, the experiments which discovered the weak neutral current are now represented in the scientist's account as closed systems just because the neutral current is seen to be real. Conversely, other observation reports which were once taken to imply the non-existence of the neutral current are now represented as being erroneous: clearly, if one accepts the reality of the neutral current, this must be the case. Similarly, by interpreting quarks and so on as real entities, the choice of quark models and gauge theories is made to seem unproblematic: if quarks really are the fundamental building blocks of the world, why should anyone want to explore alternative theories?

Most scientists think of it as their purpose to explore the underlying structure of material reality, and it therefore seems quite reasonable for them to view their history in this way.⁶ But from the perspective of the historian the realist idiom is considerably less attractive. Its most serious shortcoming is that it is retrospective. One can only appeal to the reality of theoretical constructs to legitimate scientific judgments when one has already decided which constructs are real. And consensus over the reality of particular constructs is the outcome of a historical process. Thus, if one is interested in the nature of the process itself rather than in simply its conclusion, recourse to the reality of natural phenomena and theoretical entities is self-defeating.

How is one to escape from retrospection in analysing the history of science? To answer this question, it is useful to reformulate the objection to the scientist's account in terms of the location of agency in science. In the scientist's account, scientists do not appear as genuine agents. Scientists are represented rather as passive observers of nature: the facts of natural reality are revealed through experiment; the experimenter's duty is simply to report what he sees; the theorist accepts such reports and supplies apparently unproblematic explanations of them. One gets little feeling that scientists actually do anything in their day-to-day practice. Inasmuch as agency appears anywhere in the scientist's account it is ascribed to natural phenomena which, by manifesting themselves through the medium of experiment, somehow direct the evolution of science. Seen in this light, there is something odd about the scientist's account. The attribution of agency to inanimate matter rather than to human actors is not a routinely acceptable notion. In this book, the view will be that agency belongs to actors not phenomena: scientists make their own history, they are not the passive mouthpieces of nature. This perspective has two advantages for the historian. First, while it may be the scientist's job to discover the structure of nature, it is certainly not the historian's. The historian deals in texts, which give him access not to natural reality but to the actions of scientists: scientific practice.⁷ The historian's methods are appropriate to the exploration of what scientists were doing at a given time, but will never lead him to a quark or a neutral current. And, by paying attention to texts as indicators of contemporary scientific practice, the historian can escape from the retrospective idiom of the scientist. He can, in this way, attempt to understand the process of scientific development, and the judgments entailed in it, in contemporary rather than retrospective terms, but only, of course, if he distances himself from the realist identification of theoretical constructs with the contents of nature.⁸

This is where the mirror symmetry arises between the scientist's account and that offered here. The scientist legitimates scientific judgments by reference to the state of nature; I attempt to understand them by reference to the cultural context in which they are made. I put scientific practice, which is accessible to the historian's methods, at the centre of my account, rather than the putative but inaccessible reality of theoretical constructs. My goal is to interpret the historical development of particle physics, including the pattern of scientific judgments entailed in it, in terms of the dynamics of research practice. To explain how I seek to accomplish this, I will sketch out here some of the salient features of the development of HEP, and describe the framework I adopt for their analysis.⁹

The establishment of the quark-gauge theory view of elementary particles did not take place in a single leap. As we shall see, it was a product of the founding and growth of a whole constellation of experimental and theoretical research traditions structured around the exploration and explanation of a circumscribed range of natural phenomena. Traditions within this constellation drew upon different aspects of the quark-gauge theory picture of elementary particles, and, as they grew during the late 1960s and 1970s, they eventually displaced traditions which drew upon alternative images of physical reality. Seen from this perspective, the problem of understanding the establishment of quarks and gauge theory in the practice of the HEP community is equivalent to that of understanding the dynamics of research traditions. To see what is involved here, consider an idealised discovery process.

Suppose that a group of experimenters sets out to investigate some facet of a phenomenon whose existence is taken by the scientific community to be well established. Suppose, further, that when the experimenters analyse their data they find that their results do not conform to prior expectations. They are then faced with one of the problems of scientific judgment noted above, that of the potential fallibility of all experiments. Have they discovered something new about the world or is something amiss with their performance or interpretation of the experiment? From an examination of the details of the experiment alone, it is impossible to answer this question. However thorough the experimenters have been, the possibility of undetected error remains.¹⁰ Now suppose that a theorist enters the scene. He declares that the experimenters’ findings are not unexpected to him: they are the manifestation of some novel phenomenon which has a central position in his latest theory. This creates a new set of options for research practice. First, by identifying the unexpected findings with an attribute of nature rather than with the possible inadequacy of a particular experiment, it points the way forward for further experimental investigation. And secondly, since the new phenomenon is conceptualised within a theoretical framework, the field is open for theorists to elaborate further the original proposal.

One can imagine a variety of sequels to this episode, but it is sufficient to outline two extreme cases. Suppose that a second generation of experiments is performed, aimed at further exploration of the new phenomenon, and that they find no trace of it. In this case, one would expect that suspicion will again fall upon the performance of the so-called discovery experiment, and that the theorist's conjectures will once more be seen as pure theory with little or no empirical support. Conversely, suppose that the second-generation experiments do find traces which conform in some degree with expectations deriving from the new theory. In this case, one would expect scientific realism to begin to take over. The new phenomenon would be seen as a real attribute of nature, the original experiment would be regarded as a genuine discovery, and the initial theoretical conjecture would be seen as a genuine basis for the explanation of what had been observed. Furthermore, one would expect further generations of experimentation and theorising to take place, elaborating the founding experimental and theoretical achievements into what I am calling research traditions.

This image of the founding and growth of research traditions is very schematic. It typifies, nevertheless, many of the historical developments which we will be examining, and I will therefore discuss it in some detail. In particular, I want to enquire into the conditions of growth of such traditions. I have so far spoken as though traditions are self-moving; as though succeeding generations of research come into being of their own volition. Clearly this image is inadequate as it stands: research traditions prosper only to the extent that scientists decide to work within them. What is lacking is a framework for understanding the dynamics of practice, the structuring of such decisions. I want, therefore, to present a simple model of this dynamics which will inform my historical account. The model can be encapsulated in the slogan ‘opportunism in context’.

To explain what context entails, let me continue with the example of two research traditions, one experimental the other theoretical, devoted to the exploration and explanation of some natural phenomenon. It is, I think, clear that each generation of practice within one tradition provides a context wherein the succeeding generation of practice in the other can find both its justification and subject matter. Consider the theoretical tradition: to justify his choice to work within it, a theorist has only to cite the existence of a body of experimental data in need of explanation. And fresh data, from succeeding generations of experiment, constitute the subject matter for further elaborations of theory. Conversely, for the experimenter, his decision to investigate the phenomenon in question rather than some other process is justified by its theoretical interest, as manifested by the existence of the theoretical tradition. And each generation of theorising serves to mark out fresh problem areas to be investigated by the next generation of experiment. Thus, through their reference to the same natural phenomenon, theoretical and experimental traditions constitute mutually reinforcing contexts. Without in any way committing oneself to the reality of the phenomenon, then, one can observe that through the medium of the phenomenon the two traditions maintain a symbiotic relationship.

This idea of the symbiosis of research practice, wherein the practice of each group of physicists constitutes both justification and subject matter for that of the others, will be central to my analysis of the history of HEP. I have explained it for the simple case of experimental and theoretical traditions structured around a particular phenomenon, because this is the archetype of many of the developments to be discussed. But it will also underlie my treatment of more complex situations, where, for example, many traditions interact with one another, or when the traditions at issue are purely theoretical ones. In itself, though, reference to context is insufficient to explain the cultural dynamics of research traditions. The question remains of why particular scientists contribute to particular traditions in particular ways. Here I find it very useful to refer to ‘opportunism’. The point is this. Each scientist has at his disposal a distinctive set of resources for constructive research. These may be material (the experimenter, say, may have access to a particular piece of apparatus) or they may be intangible (expertise in particular branches of experiment or theory acquired in the course of a professional career, for example).¹¹ The key to my analysis of the dynamics of research traditions will lie in the observation that these resources may be well or ill matched to particular contexts. Research strategies, therefore, are structured in terms of the relative opportunities presented by different contexts for the constructive exploitation of the resources available to individual scientists.

Opportunism in context is the theme which runs through my historical account. I seek to explain the dynamics of practice in terms of the contexts within which researchers find themselves, and the resources which they have available for the exploitation of those contexts. It is, of course, impossible to discuss the entire practice of the hep community at the micro-level of the individual, and when discussing routine developments within already established traditions I confine myself to an aggregated, macro-level analysis in terms of shared resources and contexts. As far as experimental traditions are concerned this creates no special problems. Resources for hep experiment are limited by virtue of their expense; major items of equipment are located at a few centralised laboratories. Those facilities constitute the shared resources of hep experimenters. The interest of theorists in particular phenomena likewise constitutes a shared context. If the facilities available are adequate to the investigation of questions of theoretical interest, one can readily understand that an experimental programme devoted to that phenomenon should flourish. Similarly, the data generated within experimental traditions constitute a shared context for theorists. But a problem arises when one comes to discuss the nature of shared theoretical resources: what is the theoretical equivalent of shared {11} experimental facilities? One might look here to the shared material resources of theorists — the computing facilities, for example, which have played an increasingly significant role in the history of modern theoretical (as well as experimental) physics. But this would be insufficient to explain why quarks and gauge theory triumphed at the expense of other theoretical orientations within hep. Instead I focus primarily upon the intangible resource of theoretical expertise. 
To explain how I use this concept let me briefly preview the main features of theory development which emerge from the historical account.

The most striking feature of the conceptual development of hep is that it proceeded through a process of modelling or analogy.12 Two key analogies were crucial to the establishment of the quark-gauge theory picture. As far as quarks themselves were concerned, the trick was for theorists to learn to see hadrons as quark composites, just as they had already learned to see nuclei as composites of neutrons and protons, and to see atoms as composites of nuclei and electrons. As far as the gauge theories of quark and lepton interactions were concerned, these were explicitly modelled upon the already established theory of electromagnetic interactions known as quantum electrodynamics. The point to note here is that the analysis of composite systems was, and is, part of the training and research experience of all theoretical physicists. Similarly, in the period we will be considering, the methods and techniques of quantum electrodynamics were part of the common theoretical culture of hep. Thus expertise in the analysis of composite systems and, albeit to a lesser extent, quantum electrodynamics constituted a set of shared resources for particle physicists. And, as we shall see, the establishment of the quark and gauge-theory traditions of theoretical research depended crucially upon the analogical recycling of those resources into the analysis of various experimentally accessible phenomena.

In discussing the development of established traditions, then, my primary explanatory variables are the shared material resources of experimenters and the shared expertise of theorists. However, there remains the problem of accounting for the founding of new traditions and the first steps towards the establishment of new phenomena. Such episodes are not to be understood in terms of the gross distribution of shared material resources or expertise, but they pose no special problems because of that. I will try to show that these episodes are just as comprehensible as routine developments within established traditions, and are similarly to be understood. The only difference between my accounts of the development of traditions and of their founding is that to discuss the latter I move, perforce, from the macro-level of the group to the micro-level of the individual. I aim {12} to show that the founding of new traditions can be understood in terms of the particular resources and context of the individuals concerned, just as the elaboration of those traditions can be understood in terms of the shared resources and contexts of the groups involved.13

Having outlined the opportunism-in-context model, we can now return to the problem posed earlier of how scientific judgments pertaining to experimental fallibility and theoretical underdetermination can be related to the dynamics of practice. Consider first the fallibility of experiment. Whilst it is possible to argue that all experiments are in principle fallible, experimenters do not enter this as an explicit caveat when reporting their findings: they simply report that, say, a particular quantity has been measured to have a particular value (often within a stated margin of uncertainty). It is then up to their colleagues to decide whether or not to challenge them, and such decisions are related to decisions over future practice. If the theorist can find the resources for a constructive analysis of the data, one should not expect him to spend long periods of time in searching for ways to challenge the experiment. If the data, through the medium of theory, raise new problems for experiment to investigate, then neither will experimenters be disposed to probe deeply. In this way, the potential fallibility of experiments is rendered manageable.14 A parallel solution is available to the problem of the underdetermination of theory. While a multiplicity of different theoretical explanations may be conceivable for any set of data, not all of these will be attractive in terms of the dynamics of practice. Theorists may possess the appropriate expertise to articulate one or more in a constructive fashion, raising, say, new questions which experimenters can tackle; other theoretical proposals may be divorced from any significant pool of theoretical expertise and effectively meaningless; and yet others will lead to questions which experimenters cannot investigate with available techniques, and therefore fail to sustain a symbiosis between theory and experiment. 
A choice which is impossible to make on purely empirical grounds can thus be straightforward when construed in terms of the dynamics of practice.

The outline of the explanatory framework to be adopted here is now complete. The scientific judgments, which in the scientist's account are retrospectively legitimated by reference to the reality of theoretical entities and phenomena, will here be related to the dynamics of contemporary practice. That dynamics will be analysed as a manifestation of opportunism in context.15 However, before closing this discussion, I want to draw attention to an important {13} point which has been so far left implicit. I have stressed that the fallibility of experiment is rendered manageable through the symbiosis of experimental and theoretical research traditions, and this has a rather striking consequence. To say that all experiments are ‘open’ and fallible is to note that no experimental technique (or procedure or mode of interpretation) is ever completely unproblematic. Part of the assessment of any experimental technique is thus an assessment of whether it ‘works’ — of whether it contributes to the production of data which are significant within the framework of contemporary practice. And this implies the possibility, even the inevitability, of the ‘tuning’ of experimental techniques — their pragmatic adjustment and development according to their success in displaying phenomena of interest. If one takes a realist view of natural phenomena, then such tuning is both unproblematic and uninteresting, being simply a necessary skill of the experimenter, and the scientist's account correspondingly fails to pay any attention to it. But if one abstains from realism, tuning becomes much more interesting. Natural phenomena are then seen to serve a dual purpose. As theoretical constructs they serve to mediate the symbiosis of theoretical and experimental practice (and hence to make realist discourse retrospectively possible); and, at the same time, they sustain and legitimate the particular experimental practices inherent in their own production. 
To suspend my earlier strictures against the imputation of agency to inanimate objects for the sake of a symmetric formulation, one can speak of a symbiosis between natural phenomena and the techniques entailed in their production, wherein each confers legitimacy upon the other. Such a symbiosis is a far cry from the antagonistic idea of experiment as an independent and absolute arbiter of theory, and does, I think, call for more attention than it has so far received from historians and philosophers of science. Perhaps the best way to explore it is through detailed case-studies of individual experiments. Space permits the inclusion of only one such study here: the set-piece analysis of the discovery of the weak neutral current, in Section 6.5. But throughout the book I point to instances of technical tuning in less detailed analyses of individual experiments, and in the closing chapter I return to a discussion of its implications.16

To round off these introductory remarks, let me briefly review the major historical developments discussed in the following chapters. The story to be told is of the founding and growth of research traditions structured around the quark concept. The account accordingly focuses upon the period from 1964, when quarks were first {14} invented, to 1980, when quark-physics traditions had come to dominate research practice in hep.17 Within that period it is useful to distinguish between two constellations of symbiotic research traditions which I will refer to as the ‘old physics’ and the ‘new physics’. The old physics dominated practice in hep throughout the 1960s and was distinguished by a ‘common-sense’ approach to the subject matter of the field. By this I mean that experimenters concentrated their efforts upon the phenomena most commonly encountered in the laboratory, and theorists sought to explain the data so produced. Amongst the theories developed in this period were early formulations of the quark model, as well as theories related to the so-called ‘bootstrap’ conjecture which explicitly disavowed the existence of quarks. The old physics thus consisted of traditions of common-sense experiment, devoted to the investigation of the most conspicuous laboratory phenomena, which sustained and were sustained by both quark and non-quark traditions of theorising. It should be noted that gauge theory did not figure in any of the dominant theoretical traditions of the old physics. By the end of the 1970s the old physics had been almost entirely displaced by the new. Experimentally, the new physics was ‘theory-oriented’: experimenters had come to eschew the common-sense approach of investigating the most conspicuous phenomena, and research traditions focused instead upon certain very rare processes. Theoretically, the new physics was the physics of quarks and gauge theory. 
Once more, theoretical and experimental research traditions reinforced one another, since the rare phenomena on which experimenters focused their attention were just those for which gauge theorists could offer a constructive explanation.

Thus there were elements of continuity between the old and new physics: the quark concept, for example, was carried over from one to the other. But there were also discontinuities. The non-quark, bootstrap approach to theorising largely disappeared from sight, and the quark concept became embedded in the wider framework of gauge theory. And, more strikingly, experimental practice in hep was almost entirely restructured in order to explore the rare phenomena which were at the heart of the new physics. Thus the transformation between the old and new physics and the consequent establishment of the quark-gauge theory world-view involved much more besides conceptual innovation. It was intrinsic to the transformation that the particle physicists’ way of interrogating the world through their experimental practice was transformed too. This is one of the most fascinating features of the history of hep and I will try to explain how and why it came about. One wonders whether other great conceptual {15} developments in the history of science — the establishment of quantum mechanics, for example — were associated with similar shifts in experimental practice. Unfortunately, much historical writing tends to reflect the scientist's retrospective realism, regarding experiment as unproblematic and therefore uninteresting, and it is hard to answer such questions at present.

The historical account, then, focuses upon the origins of the quark concept in the old physics and upon the establishment of the new physics of quarks and gauge theory, paying particular attention to the transformation between the old and the new. The structure of the narrative reflects these preoccupations. Part i, comprising this and the two following chapters, aims to delineate the context within which the history of quark physics was enacted. Chapter 2 gives some statistics on the size and composition of the hep community, discusses the general features of hep experiment, and outlines the post-1945 development of experimental facilities in hep. Chapter 3 sketches out the growth of the major traditions of hep theory and experiment between 1945 and 1964, the year in which quarks were invented. It thus sets the scene for the intervention of quarks into the old physics.

Part ii begins the story proper. It covers the period from 1964 to 1974, ten years which saw the old quark physics established and the embryonic new-physics traditions founded. Chapter 4 discusses the two earliest formulations of the quark model and the relationships of these with common-sense traditions of experiment. Chapter 5 discusses the founding of the first new-physics traditions: the experimental discovery in 1967 of a new phenomenon — scaling — and its explanation in terms of a third variant on the quark theme, the quark-parton model. Chapter 6 reviews the first impact of gauge theory upon the experimental scene. This came with the 1973 discovery of another new phenomenon, the weak neutral current, the existence of which had been predicted on the basis of unified electroweak gauge theory. Chapter 7 covers the development of a gauge theory of the strong interactions. This was quantum chromodynamics, which promised to underwrite the quark-parton model, and hence to make contact with experimental work on scaling phenomena. Chapter 8 concludes Part ii and has three objectives. First, it summarises the conceptual bases of electroweak gauge theory and qcd in preparation for Part iii. Secondly, it provides an overview of the topics of active hep research interest in 1974, emphasising that gauge theory had had relatively little impact at this stage: the old physics — of both quark and non-quark varieties — still dominated experimental and theoretical hep. Thirdly, Chapter 8 discusses three {16} transitional biographies, seeking to analyse why theorists who grew up (professionally) in the old-physics era should have appeared at the forefront of the developments of Part iii.

Part iii of the account deals with the establishment of the quark-gauge theory world-view in the period from 1974 to 1980. Its subject is the growth and diversification of the experimental and theoretical traditions of the new physics. Chapter 9 discusses a crucial episode in the history of the new physics: the discovery of the ‘new particles’ and their theoretical explanation in terms of ‘charm’. The discovery of the first of the new particles was announced in November 1974 and by mid-1976 the charm explanation had become generally accepted. This was ‘the lever that turned the world’ in terms of the transformation between the old and the new physics. Theoretically, the existence of quarks came to be regarded as unproblematically established (despite the continuing failure of experimenters to observe isolated quarks in the laboratory), a specific electroweak gauge theory was singled out for intensive investigation, and qcd was brought most forcibly to the attention of particle physicists. On the experimental plane, charmed particles became a prime target for investigation, and the new interest in gauge theory provided a context in which all of the traditions of new-physics experiment could flourish, eventually to the exclusion of all else. Chapter 10 reviews developments concerning electroweak theory in the latter half of the 1970s. More new particles were discovered, pointing to an expanded ontology of quarks and leptons; detailed experimentation on the weak neutral current culminated in consensus in favour of ‘the standard model’ — the simplest and prototypical electroweak theory. Chapter 11 reviews the theoretical development of qcd in symbiosis with a range of experimental traditions. Chapter 12 then examines the growth of the new physics from the perspective of experimental practice. 
It aims to show that by the late 1970s the phenomenal world of the new physics had been built into both the present and future of experimental hep; the world was, in effect, defined by experimenters to be one of quarks and leptons interacting as gauge theorists said they should. Quantitative data on the new-physics takeover of experimental programmes are presented. The transformation of experimental hardware in this takeover is discussed. And the predication of planning for future major facilities on the new-physics world-view is documented.

By the end of the 1970s, the new physics had established a stranglehold upon hep. Gauge theories of quarks and leptons dominated contemporary practice; the same theories constituted particle physicists’ visions of the future and, via the realist assumption, {17} their understanding of the past. In this sense, the story is complete with Chapter 12. But gauge theorists did not rest content with electroweak theory and qcd, and one further substantial chapter is included. Chapter 13 discusses the synthesis of electroweak theory with qcd in the so-called Grand Unified Theories or guts, and outlines the implications for experiment drawn from guts in the late 1970s. Here we shall see gauge theory permeating the cosmos in a symbiosis between hep theorists, cosmologists and astrophysicists, and we shall see experimenters disappearing down deep mines in search of unstable protons. Finally, Chapter 14 summarises the overall form of the narrative, and suggests that the history of hep should be seen as one of the social production of a culturally specific world.

1 | Schilpp (1949, 684). |

2 | A representative selection of popular articles by leading particle physicists is cited in the following chapters. Book-length popular accounts have been given by Segre (1980) and Trefil (1980). Many historical reviews appearing in the professional hep literature are cited in the text. |

3 | In referring to the ‘scientist's account’ I do not wish to suggest that all scientists produce such accounts nor that their production is solely confined to scientists. I mean only to suggest that such accounts are routinely produced and defended by members of the scientific community. |

4 | For a variety of philosophical perspectives on the problems at issue here, see Duhem (1954), Feyerabend (1975), Hanson (1958), Harding (1976), Hesse (1974), Kuhn (1970) and Quine (1964). |

5 | This is illustrated in many recent case-studies. See, for example, Collins (1975a, 1981a), Collins and Pinch (1982), Knorr et al. (1981), and Pickering (1981a, 1981b, 1984). |

6 | The scientific habit of rewriting the history of science from the standpoint of currently accepted knowledge (and the pedagogical function of this activity) was first remarked on by Kuhn (1970). Latour and Woolgar (1979) suggest that such rewriting entails a two-stage process of ‘splitting’ and ‘inversion’. Scientists first argue about the meaning and significance of their research in terms of the nature of reality. If and when a consensus is reached, splitting can take place: particular theoretical constructs become regarded as preexisting attributes of the natural world, independent of the particular arguments and practices implicit in their establishment. Inversion is then possible: the reality of the theoretical constructs is used to explain the validity of these arguments and practices, and to indicate the invalidity of any arguments or practices which support an alternative construction of reality. |

7 | By texts, I refer both to standard historical sources — published and {18} unpublished scientific papers, scientific correspondence, laboratory notebooks and so on — and to interview material, which I have found very useful in constructing this account. |

8 | For more discussion of these methodological remarks, see Collins (1981b). |

9 | I should note here that throughout the narrative I will refer to the scientist's account of events as a foil for my own analysis. Philosophers may accuse me of assaulting a straw man. The scientist's version, they might argue, is a form of naive realism — appropriate to the unreflective practice of science, perhaps, but not a view which any philosopher would care to defend. My response would be that the thrust of my analysis is that the development of science should be understood in sociological terms, and almost any philosophical position would serve as a foil for this argument. I choose to take issue with the naive realist view of science which is at least widely held, even if philosophically disreputable. I might add that while most academic observers would acknowledge the failings of naive realism, the habit of putting the phenomena first — the attribution of agency to theoretical constructs — is hardly conspicuous by its absence from contemporary writing in the history and philosophy of science. |

10 | For interesting reflections on this point from a practising hep experimenter, see Deutsch (1958). |

11 | By referring to expertise, I want to point to the fact that science is not purely an articulated body of knowledge. The articulated knowledge of the community is sustained by, and developed in the context of, a wealth of unverbalised theoretical and experimental skills unevenly distributed amongst its members. For more discussion of this point and its consequences, see Kuhn (1970), Polanyi (1973) and Collins (1974, 1975b). |

12 | The importance of analogy in theory development has been discussed by many authors. For a philosophical discussion, see Hesse (1974). Masterman (1970) has identified Kuhn's (1970) notion of an ‘exemplar’ with that of a crude analogy. Knorr (1981) gives a sociologically oriented discussion. The utility of analogy in analysing experimental as well as theoretical practice is shown in Schon (1969). For applications to the history of hep, see Pickering (1980, 1981c). |

13 | Since such formulations seem conducive to accusations of idealism in certain quarters, I should make my position clear. I have no wish to deny reality — in the shape of experimental data — a role in the development of scientific knowledge. As I hope is clear in the historical account, experimental data are at the very heart of scientific argument over the reality of theoretical constructs. However, these arguments can encompass a variety of positions which call into question the meaning and validity of the data, and it seems therefore impossible to regard the data alone as forcing a particular outcome to the debate. Only when seen within a field of practice can the force ascribed to given data be understood. {19} |

14 | Let me reiterate that this is a point of principle, and is most certainly not an imputation of theoretical bias or bad faith either to individual experimenters or to the scientific community in general. Given that all experiments are fallible, even those most conscientiously performed, it seems inevitable that experimental adequacy should ultimately be assessed in terms of the data produced. |

15 | In regarding the dynamics of practice rather than natural phenomena as the motor of scientific change, the present account falls within the tradition of ‘constructivist’ approaches to the history and sociology of science. For other extended case-studies within this tradition, see Fleck (1979), Knorr-Cetina (1981), Latour and Woolgar (1979) and MacKenzie (1981). For more general discussions of the approach, see Barnes (1977, 1982) and Bloor (1976). Shapin (1982) provides an extensive review of the historical literature from this perspective. Barboni (1977) offers a well documented analysis of the dynamics of practice in experimental hep, but does not seek to relate his analysis to the content of the knowledge produced. |

16 | Many of the detailed case studies listed above illustrate the tuning of experimental techniques within a theoretically-defined context, for example, Collins (1975a), Pickering (1981a, 1984) and Fleck (1979). Further studies which focus on this issue are Galison (1982), Gooding (1982), Holton (1978) and Shapin (1979). |

17 | The designation of 1980 as a cut-off date for this study is somewhat arbitrary. Many of the developments to be covered were essentially complete by 1978, and that will provide a natural point for termination of much of the account. However, certain important developments concerning ‘grand unification’ only got under way in the late 1970s, and I will follow these into the 1980s. |


This chapter sets the scene for the historical drama to follow. Section 1 discusses the demography of the hep community. Section 2 reviews the basic hardware of hep experiment and the distinctively different patterns of its use in the old and the new physics. Section 3 outlines the chronology of accelerator construction, relating this to the fluctuating fortunes of old and new physics traditions of experimental research.

Hep has always been Big Science. In comparison with many other branches of pure research its expense is enormous. In 1980, for example, the annual expenditure of cern, the major European hep laboratory, was nearly 600 million Swiss Francs, and governmental support for hep in the United States ran to $343 million.1 This is a field in which only the rich can compete. The history of experimental hep has accordingly been dominated by the United States and Europe. Outside those areas, the only significant experimental programme has been that of the Soviet Union, which has, in general, lagged behind that of the West.2 The story to be told here is thus largely one of developments on either side of the North Atlantic.3

Hep emerged as a recognisable scientific specialty shortly after the end of World War ii and, with Europe devastated, the usa quickly took the lead. However, the Europeans made determined attempts to overhaul the Americans, and by the early 1960s had achieved rough comparability in manpower. In 1962, for example, there were estimated to be 685 practising elementary-particle physicists in Europe and 850 in the usa.4 These research communities grew continuously during the 1960s before stabilising in the early 1970s as governments began to resist the physicists’ ever-increasing requests for funds.5 By this stage, Europe had achieved pre-eminence in manpower and in the late 1970s the 3,000 European particle physicists easily outnumbered their 1,650 American colleagues.6

Throughout this book, then, we will be dealing with a cast of thousands, concentrated in the economically advanced countries but with representatives sprinkled all over the world. This cast, predominantly {21} white and almost entirely male, can be subdivided in various ways. One important distinction is that between theorists and experimenters. Theory and experiment constitute distinct professional roles within hep. Each form of practice is highly technical, drawing upon quite different forms of expertise, and it is rare to find an individual who successfully engages in both.7 During the 1970s, there were around two experimenters for every theorist.8 A second axis of division relates to the subject matter of hep research. As mentioned in the preceding chapter, particle physicists recognise four fundamental forces of nature: the strong, electromagnetic, weak and gravitational interactions. Amongst these, the electromagnetic interaction has been regarded as well-understood since the 1950s, while the gravitational force is too weak to have a measurable effect upon elementary-particle phenomena. Hep research has therefore primarily aimed at investigation of the strong and weak interactions, and studies of these forces have constituted two relatively distinct fields of practice: different experimental and theoretical strategies were considered appropriate to each. This was especially true in the era of the old physics, when a sizeable majority of particle physicists concentrated their energies upon the strong rather than the weak interactions.9 In the context of the new physics the distinctions in practice between research into the strong and weak interactions remained significant but became blurred. Theoretically, a unified framework for the strong, weak and electromagnetic interactions was developed; and many new physics experiments produced data relevant to theoretical models of both the strong and weak interactions.

Finally, a comment upon the institutional locus of hep research is needed. Particle physics is a pure science, with no evident practical application for the knowledge it produces. Its institutional base lies, as one would expect, in the universities, and this is where most theorists live and work. However, the expense entailed in building and running experimental facilities has increased continuously during the history of hep, and there has been a corresponding tendency to divorce these resources from individual universities in favour of regional, national and international laboratories. Teams of experimenters, usually drawn from several universities, assemble at such laboratories to perform specific experiments, often dispersing again to their home institutions to analyse their data.10 The laboratories typically also have their own permanent staff of researchers comprising both an experimental group and a theory group.11 What goes on within the laboratories is the subject of the next section.


Although technologically extremely complex and sophisticated, experiments in hep are in principle rather simple. As indicated in Figure 2.1, one takes a beam of particles and fires it at a target. Interactions take place within the target — some of the beam particles are deflected or ‘scattered’; often additional particles are produced — and the particles that emerge are registered in detectors of various kinds.

Figure 2.1. Layout of an hep experiment.

Beam, target and detectors are the three important components in an hep experiment, and we can discuss them in turn. Historically, a variety of methods have been used for producing particle beams, but in the post-war era the workhorse of hep has been the synchrotron.12 A synchrotron consists of a continuous evacuated pipe in the form of a circular ring — a stretched-out doughnut — which is encased in a jacket of electromagnets. A bunch of stable particles — either protons or electrons — is injected into the ring at low energy, and the magnetic fields produced by the magnets are so arranged that the particles move in closed orbits around the ring. At a given point in each orbit the particles are given a radio-frequency ‘kick’ to increase their energy, and this kick is repeated until the particles within the machine attain the energy required. They are then extracted from their orbits and directed as a high-energy beam towards the experimental areas. One might imagine that beams of arbitrarily high energy could be produced by the administration of a sufficient number of kicks, but this is not the case. Every machine has an upper energy limit dictated {23} by its radius and the maximum strength of its electromagnets. As we shall see in Section 3, the trend in accelerator construction has been towards ever-higher maximum energies, attained by machines of ever-increasing size. The big machines of the 1950s had radii around 10 to 20 metres, and could be housed in a covered building; by the 1960s they had grown to around 100 metres, and were buried underground; by the 1970s radii had reached a kilometre; and Europe's projected big machine for the 1980s, lep, will have a radius of 4 kilometres.13
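The dependence of the upper energy limit on radius and magnet strength can be made concrete with the standard magnetic-rigidity relation for a singly charged particle, p ≈ 0.3 B ρ, with p in GeV/c, B in tesla and ρ in metres. The following is a minimal sketch and is not taken from the text: the function name and the sample field of 1.5 tesla are illustrative assumptions, and the bending radius of a real machine is somewhat smaller than its overall radius, since the ring is not filled entirely with bending magnets.

```python
# Magnetic rigidity: the momentum of a unit-charge particle held on a
# circle of radius rho by a uniform field B. This is a standard textbook
# relation, used here for illustration; it does not appear in the text.
GEV_PER_TESLA_METRE = 0.299792458  # speed of light / 1e9, for charge |e|


def max_momentum_gev(field_tesla: float, bend_radius_m: float) -> float:
    """Return the highest momentum (GeV/c) the magnets can keep in orbit."""
    if field_tesla < 0 or bend_radius_m < 0:
        raise ValueError("field and radius must be non-negative")
    return GEV_PER_TESLA_METRE * field_tesla * bend_radius_m


if __name__ == "__main__":
    # Radii echo the decades mentioned above; 1.5 T is an assumed field.
    for radius_m in (20.0, 100.0, 1000.0):
        print(radius_m, round(max_momentum_gev(1.5, radius_m), 1))
```

Because the attainable momentum is proportional to the product of field and radius, and the field of conventional electromagnets cannot be raised indefinitely, higher energies have in practice meant larger rings.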

The stream of particles ejected from an accelerator is known as the primary beam. It can be used directly for experiment, or it can be used to generate secondary beams. In the latter case, the primary beam is directed upon a metal target where it creates a shower of different kinds of particles. Secondary beams of the desired species of particle are then singled out from the shower using appropriate combinations of electric and magnetic fields. In this way, beams of particles which are not amenable to direct acceleration — either because they are unstable and would disintegrate during the acceleration process, or because they are electrically neutral and hence immune to the electric and magnetic fields used in acceleration — are made available for experiment. The chosen beam, primary or secondary, is brought to bear upon the experimental target, and the interactions which take place within the target are interpreted as those between the beam particles and the target nuclei. Liquid hydrogen is often the target of choice, since this constitutes a dense aggregate of the simplest nuclei — single protons — and thus facilitates the interpretation of data. When studying rare processes, however, interaction rate rather than simplicity of interpretation may be a prime consideration. In this case a heavy metal target may be used, in order to present as many nucleons (neutrons and protons) to the incoming beam as possible.

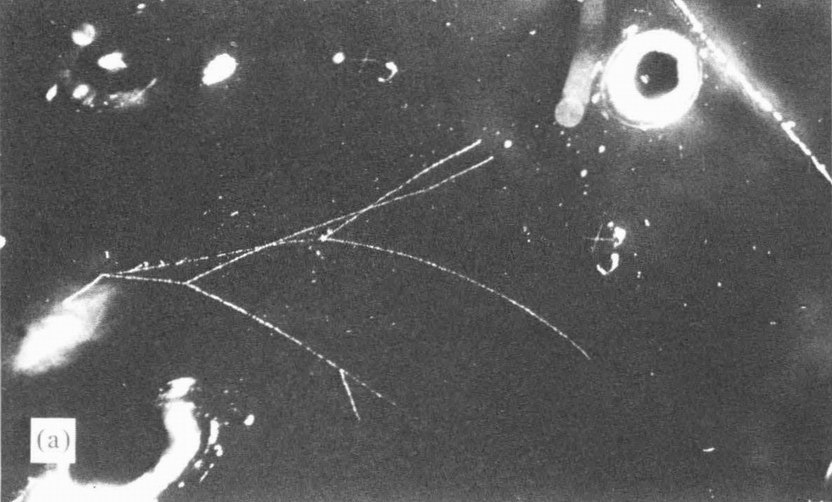

In a typical beam-target interaction several elementary particles are produced (the number increases with beam energy) and emerge to pass through detectors. A common characteristic of hep detectors is that they are only sensitive to electrically charged particles, since in different ways they all register the disruption produced by such particles in their passage through matter. Electrically neutral particles can only be detected indirectly, either by somehow converting them to charged particles and detecting the latter or, inferentially, by consideration of the energy imbalance between the incoming beam and the outgoing charged particles. Many different kinds of detectors have been used in hep over the years. The details of these need not concern us here, but a few words on the general tactics of particle detection may be useful. Detectors can be classified into two groups — visual and electronic — and we can discuss examples of each in turn. Since its invention in 1953, the pre-eminent visual hep detector has been the bubble chamber.14 A bubble chamber is a tank full of superheated liquid held under pressure. When the pressure is released, the liquid begins to boil. Bubbles form preferentially along the trajectories of any charged particles which have recently traversed the chamber. Thus lines of bubbles mark out particle tracks, which can be photographed and recorded for subsequent analysis. Figure 2.2 reproduces a bubble-chamber photograph, where the particle tracks stand out as continuous white lines (the large white blobs are reference marks on the walls of the chamber).

Figure 2.2. Bubble-chamber photograph.

In hep experiments the bubble chamber serves as both target and detector. The chamber is placed in the path of a particle beam and repeatedly expanded and repressurised. At each expansion, the tracks within the chamber are photographed, yielding, in a typical experiment, many thousands of pictures of beam-target interactions or ‘events’. As noted above, the most popular filling for bubble chambers is liquid hydrogen, but when higher interaction rates are sought a heavier liquid (such as freon) may be substituted.

Bubble chambers, and other visual detectors, have two principal advantages for experimental hep. First, the visible tracks give direct access to elementary particles — if one forgets about philosophical niceties, one can imagine that one actually sees the particles themselves. And secondly, they generate a lot of data. A bubble chamber in a particle beam can record events much faster than they can be analysed. Historically, this was an important factor in the rapid post-war growth and diffusion of hep in universities around the world. It meant that university scientists with no accelerator at their home institution could visit an accelerator laboratory for a short time, collect a lot of data, and then return to base for months of profitable analysis. However, the productivity of visual detectors is a mixed blessing. They generate a lot of data because they are indiscriminate: they register everything that takes place within them, whether it is interesting or not. Much of the analysis of track data therefore consists of separating wheat from chaff: isolating phenomena of interest from uninteresting background.

Electronic detectors minimise the problem of separating wheat from chaff because they are discriminating. A discriminating detector is one which can be ‘triggered’ — programmed to decide whether to record what is taking place within it as interesting, or to discard the event as uninteresting. High-energy particles travel at almost the speed of light and traverse any detector array in a tiny fraction of a second. Thus the time-scale for triggering is of the order of nanoseconds (10⁻⁹ seconds). The only way to process information so quickly is electronically, and the requirement of a discriminatory detector therefore translates into that of a detector whose output is an electrical signal. The first device to satisfy this criterion was the scintillation counter, which came into use in hep in the late 1940s and has since remained an important weapon in the hep experimenter's arsenal.15 Scintillators are materials which emit a flash of light when struck by a charged particle. In his pioneering work on radioactivity, Rutherford had counted alpha-particles (helium nuclei) by eye by observing flashes on a scintillating screen of zinc sulphide. But scintillators only became useful for the purposes of hep when they were conjoined with the newly developed photo-multiplier tube (pmt). Pmts register flashes of light and amplify them electronically. Their output is an electronic pulse which can be fed directly into fast electronic logic circuits, and thus arrays of scintillation counters — scintillating materials monitored by pmts — nicely fulfil the conditions for discriminating detectors. Spark chambers took their place alongside scintillation counters in the late 1950s.16 While the latter transform an optical signal into an electrical pulse, the former generate electrical signals directly. Descendants of the pre-war Geiger-Müller counter, the basic element of spark chambers is a pair of wires (or metal plates) to which a high voltage is applied.
Charged particles traversing the gaseous medium of the chamber precipitate sparking between the wires. This generates an electrical pulse which can be fed into amplification and logic circuits in much the same way as the output of scintillation counters. During the 1970s highly sophisticated descendants of the spark chamber — multi-wire proportional chambers, drift chambers and streamer chambers — played an increasingly important role in experimental hep.17 They offered high-precision measurements conjoined with refined triggering capability, and threatened to make the bubble chamber redundant. Figure 2.3 shows the output from an electronic detector of the early 1980s. The white dots represent the firing of individual counters, but the eye readily reconstructs them as continuous particle tracks.
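The triggering idea described above, in which fast logic decides within nanoseconds whether an event is worth recording, can be sketched as a simple coincidence requirement. This is a toy model under stated assumptions: the counter names, the 10-nanosecond window and the function itself are hypothetical, and real triggers are implemented in hardware logic rather than software of this kind.

```python
def coincidence_trigger(hit_times_ns, required_counters, window_ns=10.0):
    """Return True if every required counter fired within one time window.

    hit_times_ns maps a counter name to the time (in nanoseconds) of its
    pulse; a counter that did not fire is simply absent from the mapping.
    """
    if not required_counters:
        return False  # nothing to demand, so never fire
    times = []
    for counter in required_counters:
        t = hit_times_ns.get(counter)
        if t is None:
            return False  # a required counter stayed silent: discard
        times.append(t)
    # Keep the event only if the pulses are close enough in time
    # to have been caused by the same passing particle.
    return max(times) - min(times) <= window_ns


if __name__ == "__main__":
    event = {"S1": 0.0, "S2": 3.0, "S3": 6.5}  # hypothetical pulse times
    print(coincidence_trigger(event, ["S1", "S2", "S3"]))
```

An event in which one counter is silent, or in which the pulses are spread over more than the window, is rejected without ever being recorded, which is the sense in which such detectors are discriminating.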

Figure 2.3. Output from an electronic detector array.

One last component of hep hardware remains to be discussed: the particle collider. The particle accelerators so far mentioned are fixed-target machines — their beams are directed upon experimental targets at rest in the laboratory. Colliders have no such fixed targets. They store counter-rotating beams of particles and clash them together. In colliders, the target is itself a beam of particles: two counter-rotating beams of particles are stored within a single ring or within two interlaced rings, and interactions take place at the predetermined points where the beams cross one another. The advantage which colliders enjoy over fixed-target machines is the following. In fixed-target experiments only a fraction of the beam energy — known as the centre-of-mass (cm) energy — is available for physically-interesting processes; the remaining energy is effectively locked up in the overall motion of the beam-plus-target system relative to the laboratory. In a collider this is not the case. Because the two beams collide head on, the beam-target system is stationary relative to the laboratory, and all of the beam energy is cm energy. Thus cm energy in colliders grows linearly with beam energy (it is just twice the energy of a single beam) while, according to the theory of special relativity, cm energy in fixed-target experiments grows only as the square root of the beam energy. Maximum beam energy is a major determinant of accelerator cost, and colliders therefore offer a very cost-effective route to high cm energies. As an experimental tool, however, colliders are not without certain disadvantages. In fixed-target experiments, one fires a tenuous beam at a dense target; in colliders one fires a tenuous beam at another tenuous beam, and the reaction rate is correspondingly reduced. Broadly speaking, therefore, the collection of comparable data requires considerably more time and effort at a collider than at a fixed-target machine.
Furthermore colliders are limited to the investigation of interactions between stable, electrically charged particles — electrons, protons and their antiparticles — and offer a highly restricted range of experimental possibilities relative to fixed-target machines with their wide variety of secondary beams.18
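The contrast between linear and square-root growth of cm energy can be checked numerically. The sketch below assumes proton beams and a proton target, uses the standard relativistic invariant s = m_beam² + m_target² + 2·E_beam·m_target for a target at rest (energies and masses in GeV, with c = 1), and takes two identical beams colliding exactly head on for the collider case. The function names and the beam energies are illustrative choices, not values taken from the text.

```python
import math

PROTON_MASS_GEV = 0.938272  # proton rest mass in GeV (c = 1)


def cm_energy_fixed_target(beam_energy_gev,
                           beam_mass_gev=PROTON_MASS_GEV,
                           target_mass_gev=PROTON_MASS_GEV):
    """cm energy when a beam of total energy E strikes a target at rest."""
    s = (beam_mass_gev ** 2 + target_mass_gev ** 2
         + 2.0 * beam_energy_gev * target_mass_gev)
    return math.sqrt(s)


def cm_energy_collider(beam_energy_gev):
    """cm energy for two identical beams meeting head on: twice the beam energy."""
    return 2.0 * beam_energy_gev


if __name__ == "__main__":
    for energy in (25.0, 100.0, 400.0, 1600.0):  # illustrative beam energies
        fixed = cm_energy_fixed_target(energy)
        collider = cm_energy_collider(energy)
        print(f"beam {energy:7.1f} GeV: fixed target {fixed:6.1f} GeV, "
              f"collider {collider:7.1f} GeV")
```

Quadrupling the beam energy roughly doubles the fixed-target cm energy but quadruples the collider cm energy, which is the cost argument made above.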

We can now turn from the hardware of hep experiment to its substance. What do hep experimenters seek to find out, and how do they assemble their resources to do so? A typical hep experiment measures ‘cross-sections’. Cross-sections are quantified in ‘barns’, units of area (1 barn = 10⁻²⁴ cm²) which represent the effective area of interaction between beam and target particles. Cross-sections are best thought of as measures of the relative probabilities of different kinds of events: a high cross-section corresponds to a highly probable kind of event, a low cross-section to a rare one. Cross-section data constitute the empirical base of particle physics. For future reference, it will be useful here to introduce some technical distinctions between different types of cross-section. The total cross-section (denoted ‘σtot’) for the interaction of two species of particles measures the overall probability that they will interact together in any way. In self-evident notation, it refers to the process AB ⟶ X where A and B are the beam and target particles, and X represents all conceivable products of their interaction. Total cross-sections can be regarded as sums of various partial cross-sections defined by specifying X. The most basic partial cross-sections are exclusive cross-sections, in which X is fully specified. Thus one might measure the exclusive cross-section for the process AB ⟶ AABBB, in which one additional A and two additional B particles are produced. Exclusive cross-sections can be further divided into elastic and inelastic cross-sections. Elastic cross-sections refer to processes in which no new particles are produced: AB ⟶ AB; inelastic cross-sections refer to processes in which new particles are produced: AB ⟶ AAAB, for example. Detailed measurements on inelastic processes in which many particles are produced are very difficult, and in this case experimenters often measure inclusive cross-sections.
In such measurements X is only partially specified by, say, insisting that it contains at least one A particle: AB ⟶ AZ, where no attempt is made to identify the particle or particles comprising Z.
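The bookkeeping relating these kinds of cross-section can be sketched in a few lines. The channel list and the numerical values below are invented purely for illustration (they are not data from any experiment), and the helper names are hypothetical. The inclusive cross-section follows the definition given above: the sum over every final state that contains at least one particle of the chosen kind.

```python
from collections import Counter

BARN_IN_CM2 = 1e-24  # 1 barn expressed in square centimetres

INITIAL_STATE = ("A", "B")

# Hypothetical exclusive cross-sections in barns, keyed by final state.
EXCLUSIVE_BARNS = {
    ("A", "B"): 10.0,       # elastic: AB -> AB
    ("A", "A", "B"): 4.0,   # inelastic: one extra A
    ("A", "B", "B"): 3.0,   # inelastic: one extra B
    ("C", "C"): 1.0,        # inelastic: entirely new particles
}


def is_elastic(final_state):
    """Elastic means the final particles are exactly the initial ones."""
    return Counter(final_state) == Counter(INITIAL_STATE)


def total(channels):
    """Total cross-section: the sum over all exclusive channels."""
    return sum(channels.values())


def elastic(channels):
    return sum(v for state, v in channels.items() if is_elastic(state))


def inelastic(channels):
    return total(channels) - elastic(channels)


def inclusive(channels, particle):
    """Sum over channels whose final state contains the given particle."""
    return sum(v for state, v in channels.items() if particle in state)


if __name__ == "__main__":
    sigma_tot = total(EXCLUSIVE_BARNS)
    print("total:", sigma_tot, "barns =", sigma_tot * BARN_IN_CM2, "cm^2")
    print("elastic:", elastic(EXCLUSIVE_BARNS))
    print("inelastic:", inelastic(EXCLUSIVE_BARNS))
    print("inclusive (at least one A):", inclusive(EXCLUSIVE_BARNS, "A"))
    for state, value in EXCLUSIVE_BARNS.items():
        print("".join(state), "relative probability:", value / sigma_tot)
```

Dividing each exclusive value by the total gives the relative probability of that kind of event, which is the reading of cross-sections recommended in the text.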